The feedforward neural network is the simplest type of artificial neural network, and it has many applications in machine learning. It was the first type of neural network ever created, and a firm understanding of it can help you understand more complicated architectures such as convolutional or recurrent neural nets. This article is inspired by Andrew Ng's Deep Learning Specialization on Coursera, and I use a similar notation to describe the neural net architecture and the related mathematical equations. The course is a very good online resource for learning about neural nets, but since it was created for a broad audience, some of the mathematical details have been omitted. In this article, I will try to derive all the mathematical equations that describe the feedforward neural net.

Notation

Currently, Medium supports superscripts only for numbers and has no support for subscripts. So to write the names of variables, I use this notation: every character after ^ is a superscript character, and every character after _ (and before ^ if it's present) is a subscript character. For example

$$w_{ij}^{[l]}$$

is written as w_ij^[l] in this notation.

Neuron model

A neuron is the foundational unit of our brain. The brain is estimated to have around 100 billion neurons, and this massive biological network enables us to think and perceive the world around us. Basically, a neuron receives information from other neurons, processes it, and sends the result to other neurons. This process is shown in Figure 1. A single neuron has some inputs, which are received through the dendrites. These inputs are summed together in the cell body and transformed into a signal that is sent to other neurons through the axon. The axon is connected to the dendrites of other neurons by synapses. A synapse can act as a weight, making the signal that passes through it stronger or weaker depending on how often that connection is used.

This biological understanding of the neuron can be translated into a mathematical model, as shown in Figure 1. The artificial neuron takes a vector of input features x_1, x_2, …, x_n, and each of them is multiplied by a specific weight, w_1, w_2, …, w_n. The weighted inputs are summed together, and a constant value called the bias (b) is added to them to produce the net input of the neuron

$$z = w_1x_1 + w_2x_2 + \dots + w_nx_n + b = \sum_{i=1}^{n} w_i x_i + b$$

The net input is then passed through an activation function g to produce the output

$$a = g(z)$$

which is then transmitted to other neurons.

The activation function is chosen by the designer, but w_i and b are adjusted by some learning rule during the training process of the neural network.
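
To make the computation concrete, here is a minimal sketch of a single artificial neuron in plain Python. The helper name `neuron_output` and the example numbers are illustrative assumptions, and `math.tanh` simply stands in for whichever activation function the designer picks.

```python
import math
from typing import Callable, Sequence


def neuron_output(
    x: Sequence[float],
    w: Sequence[float],
    b: float,
    g: Callable[[float], float],
) -> float:
    """Return the activation a = g(z) of a single neuron."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same number of elements")
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # net input: weighted sum plus bias
    return g(z)


# Hypothetical inputs, weights, and bias.
x = [1.0, 2.0, -1.0]
w = [0.5, -0.25, 1.0]
b = 0.5
a = neuron_output(x, w, b, math.tanh)  # z = 0.5 - 0.5 - 1.0 + 0.5 = -0.5, so a = tanh(-0.5)
```

Only w and b would change during training; the activation function passed as `g` stays fixed.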

Activation functions

There are many activation functions that you can use in a neural net; some of the most commonly used ones are discussed below.

1-Binary step function

A binary step function is a threshold-based activation function. If the function's input (z) is less than or equal to zero, the output of the neuron is zero, and if it is above zero, the output is 1

$$g(z) = \begin{cases} 0 & z \le 0 \\ 1 & z > 0 \end{cases}$$

The step function is not differentiable at point z=0, and its derivative is zero at all the other points. Figure 1 shows a plot of the step function and its derivative.

2-Linear function

The output of a linear activation function is equal to its input multiplied by a constant c

$$g(z) = cz \tag{4}$$

This function is shown in Figure 2 (left). Its derivative is equal to c

$$g'(z) = c$$

The prime on g denotes differentiation with respect to its argument, which here is z. A plot of the derivative of g(z) is shown in Figure 2 (right).

3-Sigmoid function

It is a non-linear activation function that gives a continuous output in the range of 0 to 1. It is defined as

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

The sigmoid resembles the step function; however, it is continuous and avoids the jump in output values that the step function has. A plot of the sigmoid is shown in Figure 3 (left).

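The sigmoid is a differentiable function, and since we will need its derivative later, we derive it here

$$\sigma'(z) = \frac{d}{dz}\left(1 + e^{-z}\right)^{-1} = \frac{e^{-z}}{\left(1 + e^{-z}\right)^2} = \frac{1}{1 + e^{-z}} \cdot \frac{(1 + e^{-z}) - 1}{1 + e^{-z}} = \sigma(z)\big(1 - \sigma(z)\big)$$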

Figure 3 (right) shows a plot of the derivative of the sigmoid.

4-Hyperbolic tangent function

It is a non-linear activation function that is similar to the sigmoid but gives a continuous output in the range of -1 to 1. It is defined as

$$\tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$$

Its derivative is

$$\tanh'(z) = \frac{(e^{z} + e^{-z})^2 - (e^{z} - e^{-z})^2}{(e^{z} + e^{-z})^2} = 1 - \tanh^2(z)$$

Figure 4 shows a plot of this function and its derivative.
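
The derivative formulas for the sigmoid and tanh can be sanity-checked numerically. This sketch compares σ(z)(1 − σ(z)) and 1 − tanh²(z) against a central finite difference; the helper names, the step size `h`, and the tolerance are arbitrary choices for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # sigma'(z) = sigma(z) * (1 - sigma(z))


def tanh_prime(z: float) -> float:
    return 1.0 - math.tanh(z) ** 2  # tanh'(z) = 1 - tanh(z)^2


def central_difference(f, z: float, h: float = 1e-5) -> float:
    """Numerical estimate of f'(z): (f(z + h) - f(z - h)) / (2h)."""
    return (f(z + h) - f(z - h)) / (2.0 * h)


# The closed-form derivatives should agree with the numerical estimates.
for z in (-3.0, -0.5, 0.0, 0.5, 3.0):
    assert abs(sigmoid_prime(z) - central_difference(sigmoid, z)) < 1e-8
    assert abs(tanh_prime(z) - central_difference(math.tanh, z)) < 1e-8
```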

5-Rectified Linear Unit (ReLU) function

ReLU is a very popular activation function in deep neural networks. It is defined as

$$g(z) = \max(0, z) = \begin{cases} 0 & z \le 0 \\ z & z > 0 \end{cases}$$

Although it looks like a linear function, ReLU is in fact a non-linear function (it is only piecewise linear). Figure 5 (left) shows a plot of this function.

Its derivative is

$$g'(z) = \begin{cases} 0 & z < 0 \\ 1 & z > 0 \end{cases}$$

The function has a kink at z=0, and its derivative is not defined at this point. In practice, we can simply assume that the derivative at z=0 is either 1 or 0. The derivative is shown in Figure 5 (right).

6-Leaky ReLU function

There is another version of the ReLU called the Leaky ReLU, which is defined as

$$g(z) = \begin{cases} cz & z \le 0 \\ z & z > 0 \end{cases}$$

where c is a small constant (say 0.001).

Here, when z is negative, the function is not zero. Instead, it has a slight slope equal to c, which makes its derivative greater than zero. Its derivative is

$$g'(z) = \begin{cases} c & z < 0 \\ 1 & z > 0 \end{cases}$$

Figure 6 shows a plot of this function and its derivative.
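
Here is a minimal sketch of ReLU and Leaky ReLU and their derivatives in plain Python. Since neither derivative is defined at z=0, the code simply picks a value there (0 for ReLU, c for Leaky ReLU); that choice is an assumption of this sketch, and the default c=0.001 just mirrors the example value above.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def relu_prime(z: float) -> float:
    # Not defined at z = 0; this sketch uses 0 there (1 would be just as valid).
    return 1.0 if z > 0 else 0.0


def leaky_relu(z: float, c: float = 0.001) -> float:
    return z if z > 0 else c * z


def leaky_relu_prime(z: float, c: float = 0.001) -> float:
    # Also not defined at z = 0; this sketch uses c there.
    return 1.0 if z > 0 else c


zs = [-2.0, 0.0, 3.0]
relu_values = [relu(z) for z in zs]          # negative inputs are clipped to 0
leaky_values = [leaky_relu(z) for z in zs]   # negative inputs keep a small slope c
```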

Neural networks are often described with vectors and matrices, and this kind of matrix expression will be used throughout this article. To make sure that the reader understands the vector and matrix operations, I will review matrix algebra in the next section before modeling the feedforward neural net.

Linear algebra

Addition and multiplication

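A matrix is a rectangular array of numbers or variables. In this article, we use uppercase boldface letters to represent matrices. We use [A]_ij or a_ij to denote the element of matrix A at row i and column j. For example, an m×n matrix A can be written as

$$\mathbf{A} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix} \tag{14}$$

and

$$[\mathbf{A}]_{ij} = a_{ij}$$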

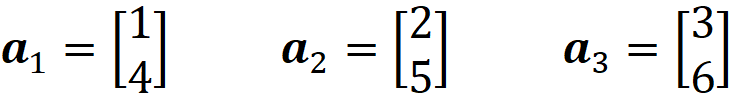

A vector is a matrix with a single row or column. The elements of a vector are usually identified by a single subscript. By convention, we use lowercase boldface letters for column vectors. For example, x is a column vector

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} \tag{15}$$

We use [x]_i or x_i to denote the i-th element of vector x.

We can add two vectors that have the same number of elements by adding their corresponding elements. So if a and b both have n elements, c = a+b is also a vector with n elements and is defined as

$$[\mathbf{c}]_i = [\mathbf{a}]_i + [\mathbf{b}]_i, \quad i = 1, \dots, n$$

Similarly, we can add two matrices if they have the same shape. If A and B are both m×n matrices, then C=A+B is an m×n matrix defined as

$$[\mathbf{C}]_{ij} = [\mathbf{A}]_{ij} + [\mathbf{B}]_{ij}$$

In linear algebra, the addition of a matrix and a vector is not defined; in deep learning, however, it is allowed. If A is an m×n matrix and b is a column vector with m elements, then C=A+b yields an m×n matrix and is defined as

$$[\mathbf{C}]_{ij} = [\mathbf{A}]_{ij} + [\mathbf{b}]_i \tag{18}$$

So b is added to each column of A. This form of addition is generally called a broadcasting operation.
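
Broadcasting can be written out explicitly with nested lists. This is a minimal sketch (the name `add_broadcast` is hypothetical); NumPy performs the same operation for `A + b` when `b` has shape `(m, 1)`.

```python
from typing import List

Matrix = List[List[float]]


def add_broadcast(A: Matrix, b: List[float]) -> Matrix:
    """C = A + b: add the column vector b (m elements) to every column of the m x n matrix A.

    Implements [C]_ij = [A]_ij + [b]_i.
    """
    if len(A) != len(b):
        raise ValueError("b must have one element per row of A")
    return [[a_ij + b_i for a_ij in row] for row, b_i in zip(A, b)]


A = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]    # 2 x 3
b = [10.0, 20.0]         # 2 elements, one per row of A
C = add_broadcast(A, b)  # b is added to each of the 3 columns
```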

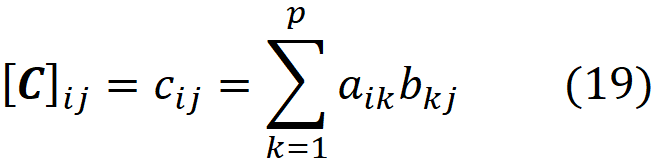

If A is an m×p matrix and B is a p×n matrix, the matrix product C=AB (which is an m×n matrix) is defined as

$$[\mathbf{C}]_{ij} = \sum_{k=1}^{p} [\mathbf{A}]_{ik}[\mathbf{B}]_{kj} \tag{19}$$

Transpose

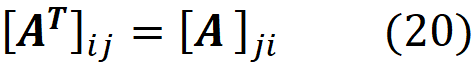

The transpose of an m×n matrix A (denoted A^T) is an n×m matrix whose columns are formed from the corresponding rows of A. For example, the transpose of A in Eq. 14 is

$$\mathbf{A}^T = \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1} \\ a_{12} & a_{22} & \cdots & a_{m2} \\ \vdots & \vdots & \ddots & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$

The transpose of a row vector is a column vector with the same number of elements, and vice versa. By convention, we assume that all vectors are column vectors, so we write a row vector as the transpose of a column vector. For example, x^T is a row vector, which is the transpose of the column vector x in Eq. 15

$$\mathbf{x}^T = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix}$$

In fact, the element in the i-th row and j-th column of the transposed matrix is equal to the element in the j-th row and i-th column of the original matrix. So

$$[\mathbf{A}^T]_{ij} = [\mathbf{A}]_{ji} \tag{20}$$

Transpose has some important properties. First, the transpose of the transpose of A is A

$$(\mathbf{A}^T)^T = \mathbf{A}$$

In addition, the transpose of a product is the product of the transposes in the reverse order

$$(\mathbf{AB})^T = \mathbf{B}^T\mathbf{A}^T$$

To prove it, recall the matrix multiplication (Eq. 19). Now, based on the definition of matrix transpose (Eq. 20), the left side is

$$[(\mathbf{AB})^T]_{ij} = [\mathbf{AB}]_{ji} = \sum_{k} [\mathbf{A}]_{jk}[\mathbf{B}]_{ki}$$

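and the right side is

$$[\mathbf{B}^T\mathbf{A}^T]_{ij} = \sum_{k} [\mathbf{B}^T]_{ik}[\mathbf{A}^T]_{kj} = \sum_{k} [\mathbf{B}]_{ki}[\mathbf{A}]_{jk}$$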

So both sides of the equation are equal.
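
Eqs. 19 and 20 translate almost line by line into code. The sketch below (plain Python; `matmul` and `transpose` are illustrative helper names) implements both from their definitions and checks the two transpose properties on a small example.

```python
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """[C]_ij = sum_k [A]_ik [B]_kj for an m x p matrix A and a p x n matrix B."""
    m, p, n = len(A), len(B), len(B[0])
    if any(len(row) != p for row in A):
        raise ValueError("inner dimensions must match")
    return [[sum(A[i][k] * B[k][j] for k in range(p)) for j in range(n)] for i in range(m)]


def transpose(A: Matrix) -> Matrix:
    """[A^T]_ij = [A]_ji: row i of A^T is column i of A."""
    return [[A[j][i] for j in range(len(A))] for i in range(len(A[0]))]


A = [[1, 2, 3],
     [4, 5, 6]]   # 2 x 3
B = [[1, 0],
     [2, 1],
     [0, 3]]      # 3 x 2

assert transpose(transpose(A)) == A                                   # (A^T)^T = A
assert transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))  # (AB)^T = B^T A^T
```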

Dot product

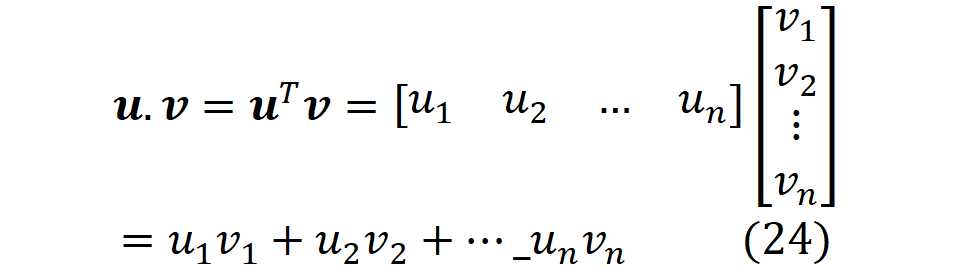

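If we have two vectors u and v with n elements

$$\mathbf{u} = \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix}, \quad \mathbf{v} = \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix}$$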

The dot product (or inner product) of these vectors is defined as the transpose of u multiplied by v

$$\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^T\mathbf{v} = \sum_{i=1}^{n} u_i v_i$$

Based on this definition, the dot product is commutative, so

$$\mathbf{u}^T\mathbf{v} = \mathbf{v}^T\mathbf{u}$$

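If we multiply a column vector u (with m elements) by a row vector v^T (with n elements), the result is an m×n matrix

$$\mathbf{u}\mathbf{v}^T = \begin{bmatrix} u_1v_1 & u_1v_2 & \cdots & u_1v_n \\ \vdots & \vdots & \ddots & \vdots \\ u_mv_1 & u_mv_2 & \cdots & u_mv_n \end{bmatrix}$$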

which is a special case of the matrix multiplication rule (Eq. 19), treating u as an m×1 matrix (only one column) and v^T as a 1×n matrix (only one row).

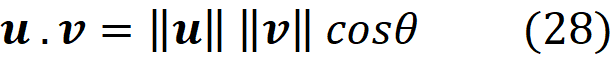

The length (also called the 2-norm) of a vector u with n elements is defined as

$$\|\mathbf{u}\| = \sqrt{\mathbf{u}^T\mathbf{u}} = \sqrt{\sum_{i=1}^{n} u_i^2}$$

The dot product can also be written in terms of the lengths of the vectors. If the angle between two vectors u and v is θ, then their dot product can also be written as

$$\mathbf{u}^T\mathbf{v} = \|\mathbf{u}\|\,\|\mathbf{v}\|\cos\theta$$

We know that the maximum value of cosine is 1 at θ=0° and its minimum value is -1 at θ=180°. So if we fix the lengths of u and v, their dot product is largest when they have the same direction (θ=0°) and smallest when they have opposite directions (θ=180°).
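
The dot product, the 2-norm, and the angle formula can be checked with a few lines of plain Python. This is a minimal sketch; the helper names and the clamping of cos θ to [-1, 1] (to absorb floating-point rounding) are choices made here, not part of the text.

```python
import math
from typing import Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """u . v = u^T v = sum_i u_i v_i."""
    if len(u) != len(v):
        raise ValueError("u and v must have the same number of elements")
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def norm(u: Sequence[float]) -> float:
    """Length (2-norm): ||u|| = sqrt(u^T u)."""
    return math.sqrt(dot(u, u))


def angle_degrees(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle theta between u and v, from u^T v = ||u|| ||v|| cos(theta)."""
    cos_theta = dot(u, v) / (norm(u) * norm(v))
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding slightly outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


u = [3.0, 4.0]
same_direction = angle_degrees(u, [6.0, 8.0])        # 0 degrees: largest dot product for these lengths
opposite_direction = angle_degrees(u, [-6.0, -8.0])  # 180 degrees: smallest dot product
```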

Partitioned matrices

A large matrix can be divided into submatrices or blocks. The blocks can be treated as if they were the elements of the matrix, so the partitioned matrix becomes a matrix of matrices. For example, the m×n matrix

$$\mathbf{A} = \begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{bmatrix}$$

can also be written as

$$\mathbf{A} = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_n \end{bmatrix}$$

where

$$\mathbf{a}_j = \begin{bmatrix} a_{1j} \\ a_{2j} \\ \vdots \\ a_{mj} \end{bmatrix}$$

So we can think of each column of A as a column vector, and A can be thought of as a matrix with just one row. Similarly, we can write A as

$$\mathbf{A} = \begin{bmatrix} \mathbf{r}_1^T \\ \mathbf{r}_2^T \\ \vdots \\ \mathbf{r}_m^T \end{bmatrix}$$

where

$$\mathbf{r}_i^T = \begin{bmatrix} a_{i1} & a_{i2} & \cdots & a_{in} \end{bmatrix}$$

So A can be thought of as a matrix with just one column. Each row of A is now a row vector, and each of these row vectors is the transpose of a column vector. When multiplying partitioned matrices, you can treat them like regular matrices. For example, if we partition an m×p matrix A as a column vector

$$\mathbf{A} = \begin{bmatrix} \mathbf{r}_1^T \\ \vdots \\ \mathbf{r}_m^T \end{bmatrix}$$

and a p×n matrix B as a row vector

$$\mathbf{B} = \begin{bmatrix} \mathbf{b}_1 & \cdots & \mathbf{b}_n \end{bmatrix}$$

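Then multiplying A by B gives

$$\mathbf{AB} = \begin{bmatrix} \mathbf{r}_1^T\mathbf{b}_1 & \cdots & \mathbf{r}_1^T\mathbf{b}_n \\ \vdots & \ddots & \vdots \\ \mathbf{r}_m^T\mathbf{b}_1 & \cdots & \mathbf{r}_m^T\mathbf{b}_n \end{bmatrix}$$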

So each element of AB is the dot product of the block in the same row of A (a row vector) and the block in the same column of B (a column vector). As a special case, multiplying the partitioned matrix A by the column vector c gives

$$\mathbf{Ac} = \begin{bmatrix} \mathbf{r}_1^T\mathbf{c} \\ \vdots \\ \mathbf{r}_m^T\mathbf{c} \end{bmatrix} \tag{30}$$

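Ac is a partitioned matrix treated like a column vector, and each element of this vector is the dot product of the block in the same row of A (which is a row vector) and the column vector c. Similarly, suppose that we want to multiply matrix A by a partitioned matrix B that has only one row, where each of its elements is a column vector. We can write

$$\mathbf{A}\begin{bmatrix} \mathbf{b}_1 & \mathbf{b}_2 & \cdots & \mathbf{b}_n \end{bmatrix} = \begin{bmatrix} \mathbf{A}\mathbf{b}_1 & \mathbf{A}\mathbf{b}_2 & \cdots & \mathbf{A}\mathbf{b}_n \end{bmatrix} \tag{31}$$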
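
The two block-multiplication rules can be illustrated with plain lists: a matrix-vector product computed row by row as dot products, and a matrix-matrix product computed column by column. This is a minimal sketch with hypothetical helper names, not an efficient implementation.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def rows_times_vector(A: Matrix, c: Vector) -> Vector:
    """Ac with A partitioned into rows: element i is (row i of A) . c."""
    return [sum(a * x for a, x in zip(row, c)) for row in A]


def columns(B: Matrix) -> List[Vector]:
    """Partition B into its columns b_1, ..., b_n."""
    return [list(col) for col in zip(*B)]


def matrix_times_columns(A: Matrix, B: Matrix) -> Matrix:
    """AB computed column by column: A[b_1 ... b_n] = [Ab_1 ... Ab_n]."""
    product_columns = [rows_times_vector(A, b_j) for b_j in columns(B)]
    return [list(row) for row in zip(*product_columns)]  # reassemble the columns into a matrix


A = [[1, 2],
     [3, 4],
     [5, 6]]      # 3 x 2
B = [[1, 0, 2],
     [0, 1, 1]]   # 2 x 3
AB = matrix_times_columns(A, B)  # 3 x 3
```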

Hadamard product

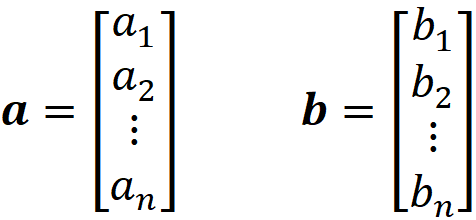

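Suppose a and b are two vectors of the same dimension

$$\mathbf{a} = \begin{bmatrix} a_1 \\ \vdots \\ a_n \end{bmatrix}, \quad \mathbf{b} = \begin{bmatrix} b_1 \\ \vdots \\ b_n \end{bmatrix}$$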

Then the Hadamard product of a and b is defined as the element-wise product of these vectors

$$\mathbf{a} \odot \mathbf{b} = \begin{bmatrix} a_1b_1 \\ \vdots \\ a_nb_n \end{bmatrix}$$

So we have

$$[\mathbf{a} \odot \mathbf{b}]_i = a_i b_i$$

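The Hadamard product of two matrices A and B of the same dimension m×n is a matrix of the same dimension and is defined as

$$\mathbf{A} \odot \mathbf{B} = \begin{bmatrix} a_{11}b_{11} & \cdots & a_{1n}b_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1}b_{m1} & \cdots & a_{mn}b_{mn} \end{bmatrix}$$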

So it is the element-wise product of the two matrices

$$[\mathbf{A} \odot \mathbf{B}]_{ij} = [\mathbf{A}]_{ij}[\mathbf{B}]_{ij}$$
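
A minimal sketch of the Hadamard product in plain Python (the function name is illustrative). Treating a column vector as an n×1 matrix lets the same function cover both the vector and the matrix definitions.

```python
from typing import List

Matrix = List[List[float]]


def hadamard(A: Matrix, B: Matrix) -> Matrix:
    """Element-wise (Hadamard) product: [A o B]_ij = [A]_ij * [B]_ij."""
    if len(A) != len(B) or any(len(r) != len(s) for r, s in zip(A, B)):
        raise ValueError("A and B must have the same shape")
    return [[a_ij * b_ij for a_ij, b_ij in zip(r, s)] for r, s in zip(A, B)]


# Column vectors written as n x 1 matrices.
a = [[1.0], [2.0], [3.0]]
b = [[4.0], [5.0], [6.0]]
ab = hadamard(a, b)
```

Unlike the matrix product of Eq. 19, no sums are involved: each output element depends only on the two elements in the same position.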

Vectorized functions

A function can take a vector or matrix and return a scalar. For example, if x is a vector and y is a scalar, then we can define a function like this

$$y = f(\mathbf{x}), \quad f: \mathbb{R}^n \to \mathbb{R}$$

It is also possible to have a function that takes a matrix and returns another matrix. In this article, we call it a vectorized function. Suppose that A is an m×n matrix and f is a function that takes a scalar and returns another scalar (that is, f: R → R). Here the notation f(A) denotes the element-wise application of f to A

$$f(\mathbf{A}) = \begin{bmatrix} f(a_{11}) & \cdots & f(a_{1n}) \\ \vdots & \ddots & \vdots \\ f(a_{m1}) & \cdots & f(a_{mn}) \end{bmatrix} \tag{37}$$

A similar equation can be written for a vector. So by simply looking at a function like f(x), we cannot tell whether it returns a scalar, a vector, or a matrix unless we know how it has been defined.
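
A vectorized function in the sense of Eq. 37 can be built from any scalar function. This minimal sketch uses a hypothetical `vectorize` helper; NumPy's universal functions such as `numpy.sqrt` behave the same way on arrays.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def vectorize(f: Callable[[float], float]) -> Callable[[Matrix], Matrix]:
    """Turn a scalar function f: R -> R into its element-wise version, [f(A)]_ij = f([A]_ij)."""
    def f_elementwise(A: Matrix) -> Matrix:
        return [[f(a_ij) for a_ij in row] for row in A]
    return f_elementwise


sqrt_elementwise = vectorize(math.sqrt)
A = [[0.0, 1.0],
     [4.0, 9.0]]
root_A = sqrt_elementwise(A)  # a 2 x 2 matrix, not a scalar
```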

Matrix calculus

When studying neural networks, we deal with multivariable functions, so we should be familiar with multivariable calculus. Suppose that f: R^n → R is a multivariable function

$$y = f(x_1, x_2, \dots, x_n)$$

So f has an n-dimensional input, but it’s a scalar function which means its range is one-dimensional (its output is a scalar quantity).

The partial derivative of a multivariable function is its derivative with respect to one of its variables, with the others held constant. The partial derivative of f with respect to x_i

$$\frac{\partial f}{\partial x_i}$$

measures how f changes as only the variable x_i increases at the point (x_1, x_2, …, x_i, …, x_n).

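The total differential of a multivariable function is the sum of its partial differentials arising from the separate variation of the variables

$$df = \frac{\partial f}{\partial x_1}dx_1 + \frac{\partial f}{\partial x_2}dx_2 + \dots + \frac{\partial f}{\partial x_n}dx_n = \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}dx_i$$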

The total differential measures how f changes as all the variables change together at the point (x_1, x_2, …, x_i, …, x_n).

The chain rule in calculus is used to compute the derivative of a function that is a composition of other functions whose derivatives are known. Suppose that x, y, and z are scalar variables, and f and g are differentiable functions. Assume that y = g(x) and z = f(y) = f(g(x)). Then, by the chain rule, we have

$$\frac{dz}{dx} = \frac{dz}{dy}\frac{dy}{dx} = f'(g(x))\,g'(x)$$

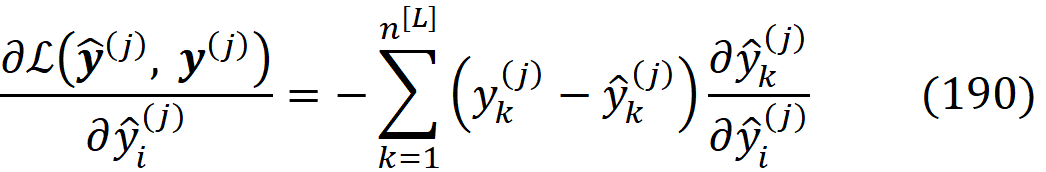

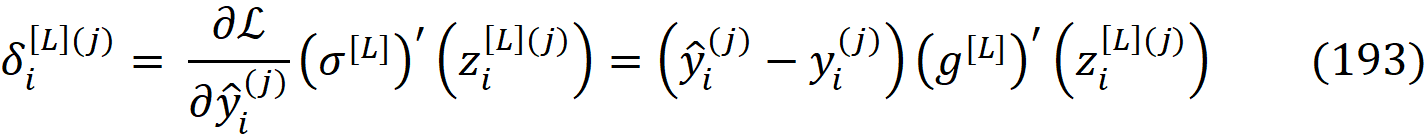

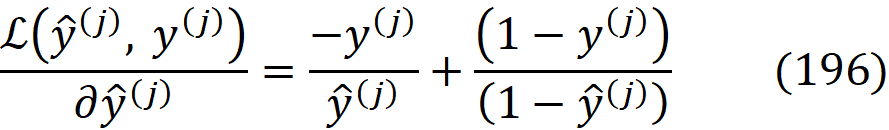

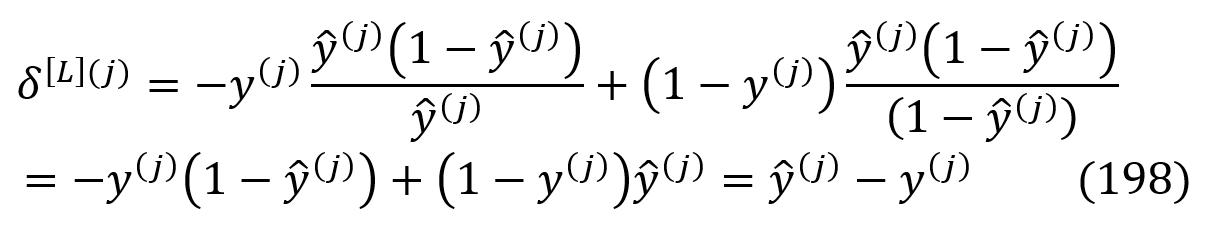

We can also generalize the chain rule to vectors. Suppose that x and y are vectors and z is a scalar. Assume that y = g(x) and z = f(y) = f(g(x)). Now we have

$$\frac{\partial z}{\partial x_i} = \sum_{j} \frac{\partial z}{\partial y_j}\frac{\partial y_j}{\partial x_i}$$

Gradient

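Suppose that f is a scalar function of the vector x. The gradient of f with respect to x is defined as

$$\nabla_{\mathbf{x}} f = \begin{bmatrix} \frac{\partial f}{\partial x_1} \\ \vdots \\ \frac{\partial f}{\partial x_n} \end{bmatrix}$$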

So the gradient of f is a vector, and element i of the gradient is the partial derivative of f with respect to x_i

$$[\nabla_{\mathbf{x}} f]_i = \frac{\partial f}{\partial x_i}$$
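
Because each element of the gradient is a partial derivative, it can be estimated by nudging one variable at a time while holding the others constant. The sketch below does this with central differences; the example function f(x_1, x_2) = x_1²x_2 + 3x_2 and the step size are made up for illustration.

```python
from typing import Callable, List


def numerical_gradient(f: Callable[[List[float]], float], x: List[float], h: float = 1e-6) -> List[float]:
    """Approximate the gradient of a scalar function f at the point x.

    Element i estimates df/dx_i by changing only x_i (all other variables held constant).
    """
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2.0 * h))
    return grad


def f(x: List[float]) -> float:
    # Hypothetical example: f(x1, x2) = x1^2 * x2 + 3 * x2, whose gradient is [2 x1 x2, x1^2 + 3].
    return x[0] ** 2 * x[1] + 3.0 * x[1]


grad_at_point = numerical_gradient(f, [1.0, 2.0])  # analytic gradient at (1, 2) is [4, 4]
```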

The gradient generalizes the concept of the derivative: the gradient of f is like the derivative of f with respect to a vector. We can also take the derivative of a function with respect to a matrix. Suppose that X is an m×n matrix and g is a scalar function. The gradient of g with respect to matrix X is an m×n matrix defined as

$$\nabla_{\mathbf{X}} g = \begin{bmatrix} \frac{\partial g}{\partial x_{11}} & \cdots & \frac{\partial g}{\partial x_{1n}} \\ \vdots & \ddots & \vdots \\ \frac{\partial g}{\partial x_{m1}} & \cdots & \frac{\partial g}{\partial x_{mn}} \end{bmatrix}$$

which means that

$$[\nabla_{\mathbf{X}} g]_{ij} = \frac{\partial g}{\partial [\mathbf{X}]_{ij}}$$

In addition, we can define the derivative of vector y (with n elements) with respect to another vector x (with m elements)

which means that

Neuron model

Now we can express the neuron model equations in vector form. The neuron's input is the vector

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$$

and the weights of the neuron can be represented by the vector

$$\mathbf{w} = \begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_n \end{bmatrix}$$

The dot product of w and x plus the bias b gives z

$$z = \mathbf{w}^T\mathbf{x} + b$$

The output, or activation, of the neuron is

$$a = g(z) = g(\mathbf{w}^T\mathbf{x} + b)$$

where w and b are the adjustable parameters of the neuron.

Supervised learning

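A training set is defined as

$$\left\{\left(\mathbf{x}^{(1)}, y^{(1)}\right), \left(\mathbf{x}^{(2)}, y^{(2)}\right), \dots, \left(\mathbf{x}^{(m)}, y^{(m)}\right)\right\}$$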

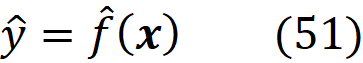

and each pair (x^(i), y^(i)) is called a training example. Here we use a number inside parentheses () as a superscript to refer to the training example number, so m is the number of training examples in the training set. x^(i) is called the feature vector or the input vector of training example i. It is a vector of numbers, and each element of this vector is called a feature. Each x^(i) corresponds to a label y^(i). We assume there is an unknown function y = f(x) that maps the feature vectors to the labels, so y^(i) = f(x^(i)). The goal of supervised learning is to use the above training set to learn or approximate f. In other words, we want to use the training set to estimate f with another function fhat and then predict the labels using

$$\hat{y} = \hat{f}(\mathbf{x})$$

where the hat symbol denotes an estimate. We want fhat(x) to be close to f(x) not only for the input vectors in the training set (x^(i)) but also for novel input vectors which are not present in the training set.

When the label is a numerical variable, we call the learning problem a regression problem, and when it is a categorical variable, the problem is known as classification. In classification, the label is a categorical variable that can be represented by the finite set y^(i) ∈ {1, 2, …, c}, where each number is a class label and c is the number of classes (the class labels can be anything, but you can always assign numbers to them like this). If c = 2 and the class labels are mutually exclusive (meaning that each input can belong to only one of the classes), we call it binary classification. An example is a medical test to determine whether a patient has a certain disease (Figure 7 top).

If c > 2 and the class labels are mutually exclusive, it is called multiclass classification. For example, suppose that we want to detect three animals in an image (a dog, a cat, and a panda), but each image contains only one animal. So the labels are mutually exclusive, and this is a multiclass classification problem (Figure 7 middle). In a multiclass problem, each training example is a pair

$$\left(\mathbf{x}^{(i)}, y^{(i)}\right), \quad y^{(i)} \in \{1, 2, \dots, c\}$$

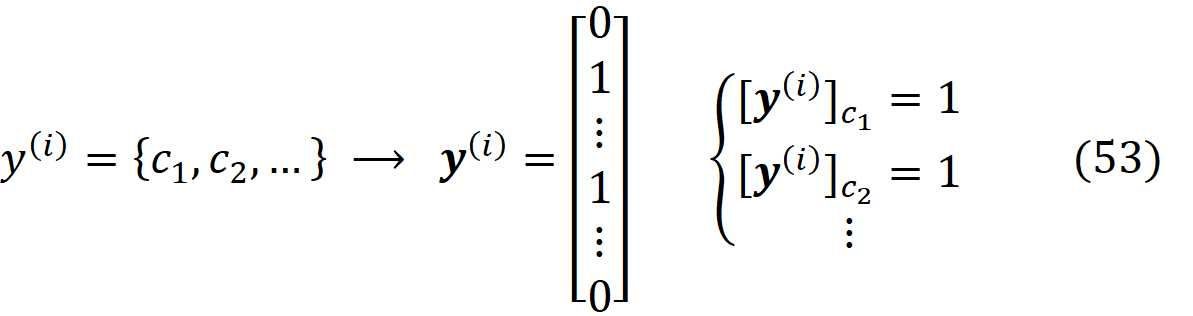

We will use a method called one-hot encoding to convert these class numbers into binary values. We convert the scalar label y to a vector y that has c elements. When y is equal to k, the k-th element of y will be one and all other elements will be zero. In fact, the i-th element of y signals the presence or absence of class i for the input vector of the example. In each label vector, only one element can be equal to one, and the others must be zero. So for each x^(i) we have a label vector y^(i) with c elements

$$\mathbf{y}^{(i)} = \begin{bmatrix} y_1^{(i)} \\ y_2^{(i)} \\ \vdots \\ y_c^{(i)} \end{bmatrix}$$

Now our training set can be defined as {x^(i), y^(i)}. If the class labels are not mutually exclusive, we call it multilabel classification. Suppose that in the image classification of animals mentioned before, each of them can be present in the image independently. For example, we can have both a dog and a cat in the same image. So the labels are not mutually exclusive anymore, and now we have a multilabel classification problem (Figure 7 bottom).

In multilabel classification, each class is considered a separate label, and each y^(i) can contain the set of classes that are present in that training example. We can use multi-hot encoding to convert these class numbers into binary values. Again, we convert the scalar label y to a vector y that has c elements. The i-th element of y signals the presence or absence of class i, so y_i=1 when class i is present and y_i=0 when it is absent. However, in each label vector, more than one element can be equal to one, since the classes are no longer mutually exclusive.
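
The two encodings differ only in how many ones a label vector may contain. This minimal sketch uses hypothetical helpers `one_hot` and `multi_hot`, with the class numbers 1 = dog, 2 = cat, 3 = panda chosen arbitrarily for the animal example above.

```python
from typing import Iterable, List


def one_hot(y: int, c: int) -> List[int]:
    """Label y in {1, ..., c} -> vector with c elements and a single 1 at position y."""
    if not 1 <= y <= c:
        raise ValueError("label must be in 1..c")
    return [1 if k == y else 0 for k in range(1, c + 1)]


def multi_hot(labels: Iterable[int], c: int) -> List[int]:
    """Set of labels (a subset of {1, ..., c}) -> vector with a 1 for every class present."""
    present = set(labels)
    if not present <= set(range(1, c + 1)):
        raise ValueError("labels must be in 1..c")
    return [1 if k in present else 0 for k in range(1, c + 1)]


# Assumed class numbering: 1 = dog, 2 = cat, 3 = panda.
only_a_cat = one_hot(2, 3)             # multiclass: exactly one class present
dog_and_cat = multi_hot({1, 2}, 3)     # multilabel: any subset of classes can be present
```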

So our training set can be defined as

$$\left\{\left(\mathbf{x}^{(i)}, \mathbf{y}^{(i)}\right)\right\}_{i=1}^{m}$$

A layer of neurons

A single neuron is a simple computational unit, and to learn complex patterns, we commonly need lots of them to work together. A layer of neurons consists of some neurons operating in parallel. The neurons in each layer are supposed to work at the same time but independently. We can think of the raw data as a separate layer and call it the input layer (Figure 8).

When we count the layers in a neural network, we don't include the input layer. So the layer after the input layer is layer 1. We use a number inside square brackets [] as a superscript to indicate the layer number. So the output, or activation, of the second neuron in layer one is

$$a_2^{[1]}$$

The number of neurons in each layer is denoted by n. So the number of neurons in the first layer is

$$n^{[1]}$$

and the activation of the last neuron in the first layer will be

$$a_{n^{[1]}}^{[1]}$$

For the input layer, the layer number is assumed to be zero, so the number of input features is

$$n^{[0]}$$

The weights for neuron i in the first layer can be represented by the vector

$$\mathbf{w}_i^{[1]} = \begin{bmatrix} w_{i1}^{[1]} \\ w_{i2}^{[1]} \\ \vdots \\ w_{in^{[0]}}^{[1]} \end{bmatrix}$$

Here

$$w_{ij}^{[1]}$$

represents the weight for the input feature j which goes into neuron i in layer 1 (Figure 9).

We can calculate the activations of the first layer using the weight and input vectors. We can use Eqs. 49 and 50 to calculate the activation of neuron i in layer 1

$$a_i^{[1]} = g^{[1]}\left(\mathbf{w}_i^{[1]T}\mathbf{x} + b_i^{[1]}\right) \tag{55}$$

where b_i^[1] is the bias for neuron i in layer 1, and g^[1] is the activation function for each neuron in layer 1. The activation function receives a scalar input (the net input of the neuron) and returns another scalar, which is the neuron's activation.

Feedforward neural networks

Now we can consider a network with several layers. The last layer of the network is called the output layer, and if there are any layers in between, we call them the hidden layers (Figure 10).

In a feedforward network, the information moves only in the forward direction: from the input layer, through the hidden layers (if they exist), to the output layer. There are no cycles or loops in this network. Feedforward neural networks are sometimes ambiguously called multilayer perceptrons. The number of neurons in layer l is denoted by

$$n^{[l]}$$

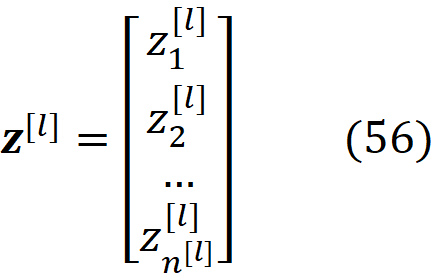

The net input of neurons in layer l can be represented by the vector

$$\mathbf{z}^{[l]} = \begin{bmatrix} z_1^{[l]} \\ z_2^{[l]} \\ \vdots \\ z_{n^{[l]}}^{[l]} \end{bmatrix}$$

Similarly, the activation of neurons in layer l can be represented by the activation vector

$$\mathbf{a}^{[l]} = \begin{bmatrix} a_1^{[l]} \\ a_2^{[l]} \\ \vdots \\ a_{n^{[l]}}^{[l]} \end{bmatrix}$$

and the weights for neuron i in layer l can be represented by the vector

$$\mathbf{w}_i^{[l]} = \begin{bmatrix} w_{i1}^{[l]} \\ w_{i2}^{[l]} \\ \vdots \\ w_{in^{[l-1]}}^{[l]} \end{bmatrix}$$

where

$$w_{ij}^{[l]}$$

represents the weight for the input j (coming from neuron j in layer l-1) going into neuron i in layer l (Figure 11).

As you see in Figure 11, in layer l, all the inputs are connected to all neurons in that layer. Such a layer is called a dense or fully connected layer.

Now we can calculate the activation of a neuron in layer l using its weight and input vectors

$$a_i^{[l]} = g^{[l]}\left(\mathbf{w}_i^{[l]T}\mathbf{a}^{[l-1]} + b_i^{[l]}\right) \tag{60}$$

where b_i^[l] is the bias for neuron i in layer l, and g^[l] is the activation function for each neuron in layer l. It is important to note that each layer can have a different activation function, but the neurons in one layer usually have the same activation function. To have a consistent notation, we can assume that

$$\mathbf{a}^{[0]} = \mathbf{x} \tag{61}$$

So when l=1, Eq. 60 is converted to Eq. 55 and we don’t need to write it separately. Now Eq. 60 can be used for all the layers of the network.
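
Eq. 60 can be applied neuron by neuron in a simple loop. The sketch below is plain Python with hypothetical names and made-up weights; row i of `W` holds the weight vector of neuron i, and ReLU is used only as an example activation.

```python
from typing import Callable, List, Sequence


def dense_layer_loop(
    W: Sequence[Sequence[float]],  # row i holds w_i^[l], the weights into neuron i of layer l
    b: Sequence[float],            # b_i^[l], the bias of neuron i
    a_prev: Sequence[float],       # a^[l-1], the previous layer's activations (x when l = 1)
    g: Callable[[float], float],   # g^[l], shared by all neurons in layer l
) -> List[float]:
    """Compute a_i^[l] = g^[l](w_i^[l]T a^[l-1] + b_i^[l]) one neuron at a time."""
    activations = []
    for w_i, b_i in zip(W, b):
        if len(w_i) != len(a_prev):
            raise ValueError("each weight vector needs n^[l-1] elements")
        z_i = sum(w_ij * a_j for w_ij, a_j in zip(w_i, a_prev)) + b_i
        activations.append(g(z_i))
    return activations


x = [1.0, -1.0]                              # a^[0] = x, so n^[0] = 2
W1 = [[0.5, 0.5], [1.0, -1.0], [2.0, 0.0]]   # n^[1] = 3 neurons
b1 = [0.0, 0.5, -1.0]
a1 = dense_layer_loop(W1, b1, x, lambda z: max(0.0, z))  # ReLU activation
```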

Vectorizing the activations

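We can combine all the weights of a layer into a weight matrix for that layer

$$\mathbf{W}^{[l]} = \begin{bmatrix} \mathbf{w}_1^{[l]T} \\ \mathbf{w}_2^{[l]T} \\ \vdots \\ \mathbf{w}_{n^{[l]}}^{[l]T} \end{bmatrix}$$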

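The i-th element of this partitioned matrix is a row vector, and this row vector is the transpose of the column vector that holds the weights of neuron i in layer l. If we expand this matrix, we get

$$\mathbf{W}^{[l]} = \begin{bmatrix} w_{11}^{[l]} & w_{12}^{[l]} & \cdots & w_{1n^{[l-1]}}^{[l]} \\ w_{21}^{[l]} & w_{22}^{[l]} & \cdots & w_{2n^{[l-1]}}^{[l]} \\ \vdots & \vdots & \ddots & \vdots \\ w_{n^{[l]}1}^{[l]} & w_{n^{[l]}2}^{[l]} & \cdots & w_{n^{[l]}n^{[l-1]}}^{[l]} \end{bmatrix}$$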

So the (i,j) element of this matrix gives the weight of the connection that goes from neuron j in layer l-1 to neuron i in layer l. We can also define a bias vector

$$\mathbf{b}^{[l]} = \begin{bmatrix} b_1^{[l]} \\ b_2^{[l]} \\ \vdots \\ b_{n^{[l]}}^{[l]} \end{bmatrix}$$

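in which the i-th element is the bias for neuron i in layer l. Now, using Eqs. 30 and 59, we can write

$$\begin{aligned} \mathbf{W}^{[l]}\mathbf{a}^{[l-1]} + \mathbf{b}^{[l]} &= \begin{bmatrix} \mathbf{w}_1^{[l]T}\mathbf{a}^{[l-1]} \\ \vdots \\ \mathbf{w}_{n^{[l]}}^{[l]T}\mathbf{a}^{[l-1]} \end{bmatrix} + \begin{bmatrix} b_1^{[l]} \\ \vdots \\ b_{n^{[l]}}^{[l]} \end{bmatrix} \\ &= \begin{bmatrix} \mathbf{w}_1^{[l]T}\mathbf{a}^{[l-1]} + b_1^{[l]} \\ \vdots \\ \mathbf{w}_{n^{[l]}}^{[l]T}\mathbf{a}^{[l-1]} + b_{n^{[l]}}^{[l]} \end{bmatrix} = \begin{bmatrix} z_1^{[l]} \\ \vdots \\ z_{n^{[l]}}^{[l]} \end{bmatrix} = \mathbf{z}^{[l]} \end{aligned} \tag{65}$$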

Please note that in the first line of Eq. 65 we added the bias vector to a matrix which is the broadcasting addition defined in Eq. 18.

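If we apply the vectorized activation function of layer l (recall Eq. 37) to the previous equation, using Eqs. 57 and 60 we get

$$g^{[l]}\left(\mathbf{W}^{[l]}\mathbf{a}^{[l-1]} + \mathbf{b}^{[l]}\right) = g^{[l]}\left(\mathbf{z}^{[l]}\right) = \begin{bmatrix} g^{[l]}(z_1^{[l]}) \\ \vdots \\ g^{[l]}(z_{n^{[l]}}^{[l]}) \end{bmatrix} = \begin{bmatrix} a_1^{[l]} \\ \vdots \\ a_{n^{[l]}}^{[l]} \end{bmatrix} = \mathbf{a}^{[l]}$$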

Now, by rearranging the previous equation, we finally have

$$\mathbf{a}^{[l]} = g^{[l]}\left(\mathbf{W}^{[l]}\mathbf{a}^{[l-1]} + \mathbf{b}^{[l]}\right) \tag{67}$$

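Eq. 67 is the forward propagation equation for a feedforward neural network. Using this equation, we can compute the activations of a layer from the activations of the previous layer. If we apply Eqs. 65 and 67 to the first layer (l=1), then the previous layer is the input layer (Eq. 61), so we have

$$\mathbf{z}^{[1]} = \mathbf{W}^{[1]}\mathbf{x} + \mathbf{b}^{[1]} \tag{68}$$

$$\mathbf{a}^{[1]} = g^{[1]}\left(\mathbf{W}^{[1]}\mathbf{x} + \mathbf{b}^{[1]}\right) \tag{69}$$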
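
Applying Eq. 67 repeatedly, starting from a^[0] = x, gives the output of the whole network. Here is a minimal sketch in plain Python; the `(W, b, g)` tuple layout, the helper names, and the 2-3-1 example network with made-up weights are all assumptions for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float], Callable[[float], float]]  # (W^[l], b^[l], g^[l])


def layer_forward(W: Matrix, b: List[float], g: Callable[[float], float], a_prev: List[float]) -> List[float]:
    """a^[l] = g^[l](W^[l] a^[l-1] + b^[l]); W is n^[l] x n^[l-1], g is applied element-wise."""
    z = [sum(w_ij * a_j for w_ij, a_j in zip(row, a_prev)) + b_i for row, b_i in zip(W, b)]
    return [g(z_i) for z_i in z]


def forward(layers: Sequence[Layer], x: List[float]) -> List[float]:
    """Apply the forward propagation equation layer by layer, starting from a^[0] = x."""
    a = x
    for W, b, g in layers:
        a = layer_forward(W, b, g, a)
    return a


def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    return z


# Hypothetical 2-3-1 network: a ReLU hidden layer followed by a linear output neuron.
layers: List[Layer] = [
    ([[0.5, 0.5], [1.0, -1.0], [2.0, 0.0]], [0.0, 0.5, -1.0], relu),
    ([[1.0, 1.0, 1.0]], [0.25], identity),
]
y_hat = forward(layers, [1.0, -1.0])
```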

Vectorization over input vectors

Now suppose that we have a training set with m examples. So we have a set of input vectors for the whole training set

$$\left\{\mathbf{x}^{(1)}, \mathbf{x}^{(2)}, \dots, \mathbf{x}^{(m)}\right\}$$

Each example has n^[0] input features, as before

$$\mathbf{x}^{(i)} = \begin{bmatrix} x_1^{(i)} \\ x_2^{(i)} \\ \vdots \\ x_{n^{[0]}}^{(i)} \end{bmatrix}$$

We can now combine all the input vectors in the training set into an input matrix

$$\mathbf{X} = \begin{bmatrix} \mathbf{x}^{(1)} & \mathbf{x}^{(2)} & \cdots & \mathbf{x}^{(m)} \end{bmatrix}$$

Each element of this partitioned matrix is a column vector equal to the input vector of the i-th example in the training set, so each element of this matrix is

$$[\mathbf{X}]_{ji} = x_j^{(i)}$$

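Each of these examples can be used as the input layer of the neural net, and we can use Eqs. 65 and 67 to compute the activations of each layer for each training example

$$\begin{aligned} \mathbf{z}^{[l](j)} &= \mathbf{W}^{[l]}\mathbf{a}^{[l-1](j)} + \mathbf{b}^{[l]} \\ \mathbf{a}^{[l](j)} &= g^{[l]}\left(\mathbf{z}^{[l](j)}\right) \end{aligned} \tag{73}$$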

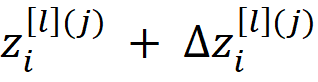

Note that the superscript [l] refers to the layer number, and the superscript in parentheses (j) refers to the training example number. Eq. 73 can also be written as

$$\mathbf{a}^{[l](j)} = g^{[l]}\left(\mathbf{W}^{[l]}\mathbf{a}^{[l-1](j)} + \mathbf{b}^{[l]}\right) \tag{74}$$

So these equations give the net input and activation vector of layer l for training example j. For the first layer, we can write (using Eqs. 68 and 69)

$$\mathbf{z}^{[1](j)} = \mathbf{W}^{[1]}\mathbf{x}^{(j)} + \mathbf{b}^{[1]} \tag{75}$$

$$\mathbf{a}^{[1](j)} = g^{[1]}\left(\mathbf{z}^{[1](j)}\right) \tag{76}$$

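We can also write

$$\begin{aligned} \mathbf{W}^{[1]}\mathbf{X} + \mathbf{b}^{[1]} &= \begin{bmatrix} \mathbf{W}^{[1]}\mathbf{x}^{(1)} & \cdots & \mathbf{W}^{[1]}\mathbf{x}^{(m)} \end{bmatrix} + \mathbf{b}^{[1]} \\ &= \begin{bmatrix} \mathbf{W}^{[1]}\mathbf{x}^{(1)} + \mathbf{b}^{[1]} & \cdots & \mathbf{W}^{[1]}\mathbf{x}^{(m)} + \mathbf{b}^{[1]} \end{bmatrix} \\ &= \begin{bmatrix} \mathbf{z}^{[1](1)} & \cdots & \mathbf{z}^{[1](m)} \end{bmatrix} \end{aligned} \tag{77}$$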

In the first line of Eq. 77, we used Eq. 31 to do the multiplication, and in the third line, we used Eq. 75 to simplify it. We can define the net input matrix as

$$\mathbf{Z}^{[l]} = \begin{bmatrix} \mathbf{z}^{[l](1)} & \mathbf{z}^{[l](2)} & \cdots & \mathbf{z}^{[l](m)} \end{bmatrix} \tag{78}$$

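So we have

$$\mathbf{Z}^{[l]} = \begin{bmatrix} z_1^{[l](1)} & z_1^{[l](2)} & \cdots & z_1^{[l](m)} \\ \vdots & \vdots & \ddots & \vdots \\ z_{n^{[l]}}^{[l](1)} & z_{n^{[l]}}^{[l](2)} & \cdots & z_{n^{[l]}}^{[l](m)} \end{bmatrix} \tag{79}$$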

Here the i-th column of Z^[l] is the net input of layer l for training example i. Similarly, we can define the activation matrix as

$$\mathbf{A}^{[l]} = \begin{bmatrix} \mathbf{a}^{[l](1)} & \mathbf{a}^{[l](2)} & \cdots & \mathbf{a}^{[l](m)} \end{bmatrix} \tag{80}$$

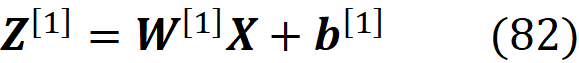

Now we can use Eq. 78 (with l=1) to rewrite Eq. 77 as

$$\mathbf{Z}^{[1]} = \mathbf{W}^{[1]}\mathbf{X} + \mathbf{b}^{[1]} \tag{82}$$

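If we apply the vectorized activation function to Eq. 78, using Eqs. 73 and 80 we get

$$g^{[l]}\left(\mathbf{Z}^{[l]}\right) = \begin{bmatrix} g^{[l]}\left(\mathbf{z}^{[l](1)}\right) & \cdots & g^{[l]}\left(\mathbf{z}^{[l](m)}\right) \end{bmatrix} = \begin{bmatrix} \mathbf{a}^{[l](1)} & \cdots & \mathbf{a}^{[l](m)} \end{bmatrix} = \mathbf{A}^{[l]} \tag{83}$$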

and by combining Eqs. 82 and 83 (with l=1), we have

$$\mathbf{A}^{[1]} = g^{[1]}\left(\mathbf{W}^{[1]}\mathbf{X} + \mathbf{b}^{[1]}\right) \tag{84}$$

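We can also use Eqs. 31, 73, 78, and 80 to write

$$\begin{aligned} \mathbf{W}^{[l]}\mathbf{A}^{[l-1]} + \mathbf{b}^{[l]} &= \begin{bmatrix} \mathbf{W}^{[l]}\mathbf{a}^{[l-1](1)} + \mathbf{b}^{[l]} & \cdots & \mathbf{W}^{[l]}\mathbf{a}^{[l-1](m)} + \mathbf{b}^{[l]} \end{bmatrix} \\ &= \begin{bmatrix} \mathbf{z}^{[l](1)} & \cdots & \mathbf{z}^{[l](m)} \end{bmatrix} = \mathbf{Z}^{[l]} \end{aligned} \tag{85}$$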

and by combining Eqs. 83 and 85, we finally have

$$\mathbf{A}^{[l]} = g^{[l]}\left(\mathbf{W}^{[l]}\mathbf{A}^{[l-1]} + \mathbf{b}^{[l]}\right) \tag{86}$$

This equation is the vectorized forward propagation equation for the whole training set. We can also assume that

$$\mathbf{A}^{[0]} = \mathbf{X} \tag{87}$$

So Eq. 86 is converted to Eq. 84 when l=1.
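
The point of Eq. 86 is that one matrix product handles all m examples at once. The sketch below (plain Python, hypothetical helper names and made-up weights) computes A^[1] for three examples in one call and then checks that each column matches what the single-example forward pass produces for that column of X.

```python
from typing import Callable, List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def layer_forward_batch(W: Matrix, b: List[float], g: Callable[[float], float], A_prev: Matrix) -> Matrix:
    """A^[l] = g^[l](W^[l] A^[l-1] + b^[l]).

    Column j of A_prev is a^[l-1](j) for training example j; b^[l] is broadcast
    (added to every column) and g^[l] is applied element-wise.
    """
    Z = [[z_ij + b_i for z_ij in row] for row, b_i in zip(matmul(W, A_prev), b)]
    return [[g(z_ij) for z_ij in row] for row in Z]


def relu(z: float) -> float:
    return max(0.0, z)


W1 = [[0.5, 0.5], [1.0, -1.0], [2.0, 0.0]]
b1 = [0.0, 0.5, -1.0]
X = [[1.0, 0.0, 2.0],    # row k holds feature k of all m = 3 examples,
     [-1.0, 1.0, 2.0]]   # so column j is x^(j) and A^[0] = X
A1 = layer_forward_batch(W1, b1, relu, X)

# Same result as feeding each example (column of X) through the layer separately.
for j in range(len(X[0])):
    x_j = [[row[j]] for row in X]                 # x^(j) as an n^[0] x 1 matrix
    a_j = layer_forward_batch(W1, b1, relu, x_j)  # a^[1](j) as an n^[1] x 1 matrix
    assert [row[0] for row in a_j] == [row[j] for row in A1]
```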

Linear vs non-linear activations

Among the activation functions that were introduced before, only the linear activation has a linear relationship with the net input (that is why we call it a linear activation!). But why do we need the nonlinear activation functions? Suppose that all the neurons in layers l-1 and l have linear activation functions. For layer l-1 we can use Eq. 74 and the definition for the linear activation function (Eq. 4) to write

We can now use this equation to write the activation vector of layer l

This equation suggests that we can merge layers l-1 and l into a single layer with a linear activation. It takes a^[l-2] and returns a^[l]. The weight matrix of this new layer is W^[l]W^[l-1], its bias vector is W^[l]b^[l-1]+b^[l]/c (assuming c≠0), and its activation function is g(z)=c²z. Now suppose that all the layers of the network have linear activations. Then we can merge all of them into one linear layer, so the network behaves like a single-layer neural net. Such a network can only represent linear functions of its input, so it is a poor choice for learning nonlinear data. As a result, nonlinear activation functions are essential ingredients of multilayer neural networks.

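A quick numerical check of the merging argument, as a sketch under two assumptions: the linear activation has the form g(z)=cz (here with a hypothetical slope c=2), and the layer sizes and random parameters are arbitrary. Expanding a^[l] = c(W^[l] c(W^[l-1]a^[l-2]+b^[l-1]) + b^[l]) shows that the merged layer needs the weight matrix W^[l]W^[l-1], the bias W^[l]b^[l-1]+b^[l]/c (which reduces to W^[l]b^[l-1]+b^[l] when c=1), and the activation c²z. The code confirms that both computations agree:

```python
import numpy as np

c = 2.0  # hypothetical slope of the linear activation g(z) = c*z

def g(z):
    return c * z

rng = np.random.default_rng(1)
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=(4, 1))  # layer l-1
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=(2, 1))  # layer l
a0 = rng.normal(size=(3, 1))                              # a^[l-2]

# Two stacked linear layers
a2 = g(W2 @ g(W1 @ a0 + b1) + b2)

# One merged layer: weights W2 W1, bias W2 b1 + b2/c, activation c^2 * z
W_merged = W2 @ W1
b_merged = W2 @ b1 + b2 / c
a2_merged = c**2 * (W_merged @ a0 + b_merged)

print(np.allclose(a2, a2_merged))  # True
```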

Output layer

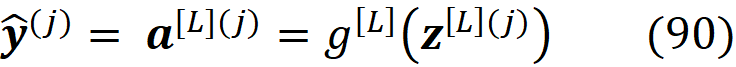

Remember that a^[L] is the activation vector of the last layer of the neural network. However, we usually denote the activation of the output layer by the vector yhat, since it is the final output of the network. So for example j, we have

For a regression problem, we have a real-valued label for each training example, so we usually use a single output neuron with a linear activation function. As mentioned before, there are three types of classification problems: binary, multiclass, and multilabel, and each type uses a different layout for the output layer. Suppose that we have m examples and each example has n^[0] features. In addition, suppose that the labels belong to c classes. So each training example is a pair

If we have a binary classification problem, then c=2 and the classes are mutually exclusive. In this case, we use a single neuron with a sigmoid activation function in the output layer. The sigmoid is a good choice for the output layer since its output lies between 0 and 1, so we can interpret it as the probability of the corresponding class. One problem is that this activation is continuous, not binary, so we need a way to interpret the raw output of the activation function as a binary output. We can define a threshold of 0.5: if the output value for an example is less than or equal to 0.5, the example belongs to class 1, and if it is greater than 0.5, it belongs to class 2. This is shown in Figure 12.

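A minimal sketch of the 0.5-threshold rule for a single sigmoid output neuron, following the text's convention that an activation less than or equal to 0.5 means class 1 and an activation above 0.5 means class 2. The function name `binary_class` and the net input values are hypothetical:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def binary_class(z, threshold=0.5):
    """Map the net input of the single output neuron to class 1 or class 2.

    Convention from the text: activation <= threshold -> class 1,
    activation > threshold -> class 2.
    """
    a = sigmoid(z)
    return np.where(a > threshold, 2, 1)

z = np.array([-2.0, 0.0, 0.3, 4.0])  # hypothetical net inputs for 4 examples
print(binary_class(z))  # [1 1 2 2]
```

Note that z=0 gives an activation of exactly 0.5, which falls on the class 1 side of the threshold.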

If we have a multilabel problem, then c≥2, and the classes are not mutually exclusive. We use multi-hot encoding to convert the label y^(i) into the vector y^(i), which has c elements (Eq. 53). Now our output layer should have c neurons with sigmoid activations, each giving the value of one of the elements of y^(i). In fact, the activation of each neuron indicates whether the input belongs to a certain class or not. So the number of elements of y^(i) is equal to the number of neurons in the last layer, n^[L]. We can still apply the 0.5 threshold to each neuron to convert the raw activation vector into the binary output, which is a multi-hot encoded vector (Figure 13).

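For the multilabel case, the same threshold is applied to each of the c sigmoid neurons independently, producing a multi-hot vector. Again a hypothetical sketch; the function name and net inputs are illustrative only:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def multilabel_output(z, threshold=0.5):
    """Turn the net inputs of c sigmoid output neurons into a multi-hot vector.

    Each neuron is thresholded on its own, so any number of classes
    (including none or all of them) can be switched on.
    """
    return (sigmoid(z) > threshold).astype(int)

z = np.array([1.2, -0.7, 2.5, -3.0])  # hypothetical net inputs, c = 4
print(multilabel_output(z))  # [1 0 1 0]
```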

If we have a multiclass problem, then c>2, and the classes are mutually exclusive. Here we use one-hot encoding to convert the label y^(i) into the vector y^(i), which has c elements (Eq. 52). So for each x^(i), we have a label vector y^(i)

Now our training set can be defined as

Now our output layer should have c neurons, so n^[L]=c, and the activation of each neuron indicates whether the input belongs to a certain class or not. However, we can no longer use c neurons with sigmoid activations. In a one-hot encoded vector, the elements always sum to one (since exactly one of them is one). This also means that these elements are not independent of each other: when one of them becomes one, the others are forced to be zero.

However, the probabilities produced by c output neurons with sigmoid activation functions are independent and are not constrained to sum to one, because these activation functions work independently. What we would like instead is a categorical probability distribution for the output vector, so the outputs should be constrained to sum to one. We use the softmax activation function for this purpose.

The softmax activation function is always applied to the last layer of neurons (Figure 14), and a last layer with this activation function is called a softmax layer. It differs significantly from the other activation functions mentioned before: it cannot be applied to each neuron independently; instead, it combines the net inputs of all the neurons in the layer to calculate their activations.

The softmax layer is shown in Figure 14 and it looks a little different. Here each circle shows a neuron in the last layer, but the output of each circle is the net input of that neuron, not its activation. These net inputs then go into the softmax activation pictured with a rectangle, and the output of softmax is the activation of the individual neurons.

The output of the softmax layer (which is, in fact, the vector of the neurons’ activations) is the vector a, which has the same number of elements as z (the net input vector) and is defined as

So the softmax layer normalizes its input by exponentiating each element and dividing the result by the sum of the exponentials of all the elements in the input vector. The exponential function always gives a positive result, so a_i is positive even if z_i is not. As a result, the activations of the softmax layer are a set of positive numbers that sum up to 1

and it can be thought of as a probability distribution.

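The softmax definition can be sketched in NumPy as follows, assuming the usual form a_i = e^(z_i) / Σ_k e^(z_k). Subtracting max(z) before exponentiating is a standard numerical safeguard, not part of the derivation; the net input values are hypothetical. The last line contrasts softmax with c independent sigmoids, whose outputs do not sum to one in general:

```python
import numpy as np

def softmax(z):
    """Softmax of a 1-D net input vector z.

    Subtracting max(z) first leaves the result unchanged (the factor
    exp(-max(z)) cancels between numerator and denominator) but avoids
    overflow in np.exp for large inputs.
    """
    e = np.exp(z - np.max(z))
    return e / e.sum()

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([2.0, -1.0, 0.5])  # hypothetical net inputs; some are negative
a = softmax(z)
print(a, a.sum())        # every activation is positive; the sum is 1 (up to rounding)
print(sigmoid(z).sum())  # independent sigmoids: the sum is not 1 here
```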

Now by applying the softmax function to the net input of the last layer of neurons, we get a normalized activation vector. The maximum element determines the class to which the input vector belongs, so we can convert the activation vector of the softmax layer into a binary output, which is a one-hot encoded vector. For example, if the activation vector of the softmax layer is [0.5 0.15 0.35]^T, it will be converted to the binary output vector [1 0 0]^T. For a multiclass problem with c classes, we use a softmax layer with c neurons as the output layer (Figure 15).

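Converting the softmax activations into a one-hot binary output amounts to placing a 1 at the position of the largest activation. A short sketch using the example vector from the text; the helper name `to_one_hot` is hypothetical, and `np.argmax` returns the first index if several elements tie for the maximum:

```python
import numpy as np

def to_one_hot(a):
    """Convert a softmax activation vector into a one-hot binary output.

    The position of the largest activation is the predicted class.
    """
    one_hot = np.zeros_like(a, dtype=int)
    one_hot[np.argmax(a)] = 1
    return one_hot

a = np.array([0.5, 0.15, 0.35])  # softmax activation vector from the example
print(to_one_hot(a))  # [1 0 0]
```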

To understand where the softmax function comes from, we should first define the hardmax function. We use hardmax (also called argmax) to determine which element of a vector has the highest value. The hardmax takes a vector z and returns another vector a: if z_i is the maximum element of z, then a_i=1; otherwise a_i=0. So for example

If z has more than one maximum element, the value 1 is divided equally among them. So if it has p maximum elements, then a_i=1/p for each of them. For example

With the above definition, the output elements of hardmax are constrained to sum to one. Softmax, in contrast, is a smooth approximation to the hardmax function. We can write the softmax function in a more general form as:

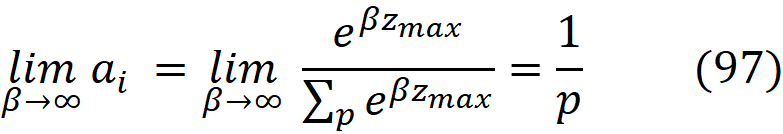

where β is a constant. Now let’s see what happens as β goes to infinity. Suppose that z has p maximum elements, each equal to z_max. Now if z_i is one of those maximum elements

where, in the denominator, only the terms with the largest exponent (z_max) matter as β goes to infinity. If z_i is not one of the maximum elements, then we have

So the general softmax function converges to the hardmax as β goes to infinity, and for β=1 it is a smooth approximation of the hardmax. Hardmax is not a continuous function, so it is not differentiable. As we will show later, the activation function must be differentiable to be used with the learning algorithm, so the differentiable softmax is used instead.

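The hardmax definition (including the 1/p split for ties) and the limit β → ∞ can be checked numerically. In this sketch the function names `hardmax` and `softmax_beta` and the test vector are hypothetical; `softmax_beta` computes e^(βz_i) / Σ_k e^(βz_k) with the usual max-subtraction for numerical stability:

```python
import numpy as np

def hardmax(z):
    """1 at the maximum element(s) of z, 0 elsewhere; p tied maxima get 1/p each."""
    is_max = (z == np.max(z))  # exact comparison is fine for this illustration
    return is_max / is_max.sum()

def softmax_beta(z, beta=1.0):
    """General softmax exp(beta*z_i) / sum_k exp(beta*z_k), computed stably."""
    s = beta * z
    e = np.exp(s - np.max(s))
    return e / e.sum()

z = np.array([3.0, 1.0, 3.0, 2.0])  # p = 2 maximum elements
print(hardmax(z).tolist())          # [0.5, 0.0, 0.5, 0.0]
for beta in [1.0, 5.0, 50.0]:
    # The largest gap between softmax and hardmax shrinks as beta grows
    print(beta, np.abs(softmax_beta(z, beta) - hardmax(z)).max())
```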

We also need to calculate the derivative of softmax. Here a_i is a function of all the elements of z, so we take the derivative of a_i with respect to z_j (which can be any element of z). If i=j, all z_k with k≠j are treated as constants, and their derivatives with respect to z_j are zero

If i≠j:

So finally we have

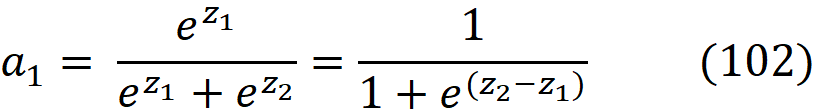
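Combining the two cases gives the standard result ∂a_i/∂z_j = a_i(δ_ij - a_j), where δ_ij is 1 if i=j and 0 otherwise, so the full Jacobian matrix is diag(a) - a aᵀ. The sketch below (helper names hypothetical) builds that matrix and compares it with a central finite-difference approximation:

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

def softmax_jacobian(z):
    """Jacobian J[i, j] = d a_i / d z_j = a_i * (delta_ij - a_j)."""
    a = softmax(z)
    return np.diag(a) - np.outer(a, a)

def numerical_jacobian(f, z, eps=1e-6):
    """Central finite-difference approximation, used only as a check."""
    J = np.zeros((z.size, z.size))
    for j in range(z.size):
        dz = np.zeros_like(z)
        dz[j] = eps
        J[:, j] = (f(z + dz) - f(z - dz)) / (2 * eps)
    return J

z = np.array([0.2, -1.3, 0.9])  # hypothetical net inputs
print(np.allclose(softmax_jacobian(z), numerical_jacobian(softmax, z), atol=1e-8))  # True
```

Each column of the Jacobian sums to zero, which reflects the fact that the softmax activations always sum to one.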

Softmax is actually a mathematical generalization of the sigmoid function, which can be used for multiclass classification under the assumption that the classes are mutually exclusive. Sigmoid is equivalent to a 2-element softmax function in which we have only two mutually exclusive classes. Let’s call them c_1 and c_2. Since we have two classes, the input vector (z) and the activation vector of the softmax layer (a) should also have two elements. Now we can write

But the activation vector of softmax is normalized, so

and by combining Eqs. 102, 103, and 104, we have

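Here we have one equation with two unknowns, which is underdetermined and has an infinite number of solutions. This freedom exists because softmax does not change when the same constant is added to every element of z, so only the difference z_1 - z_2 matters. Hence we can fix one of the unknowns. We assume that z_2=0, so we have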

which is the sigmoid activation function for z_1 (remember Eq. 6). So sigmoid is equivalent to a 2-element softmax where the second element is assumed to be zero.

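A numerical check of this equivalence, as a small sketch with hypothetical inputs: with the second net input fixed to zero, the first softmax activation matches the sigmoid of z_1. The final line illustrates the shift invariance that justifies fixing z_2:

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

for z1 in [-3.0, 0.0, 0.7, 4.0]:
    a = softmax(np.array([z1, 0.0]))  # second net input fixed to z_2 = 0
    print(z1, a[0], sigmoid(z1))      # a_1 agrees with sigmoid(z_1)

# Shifting both net inputs by the same constant leaves softmax unchanged,
# which is why z_2 can be fixed without loss of generality
print(np.allclose(softmax(np.array([1.5, 0.5])), softmax(np.array([1.0, 0.0]))))  # True
```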

Vectorizing the labels and outputs

We can define the label matrix

which combines the label vectors for all the examples. We will use the label matrix later. Of course, for a binary classification or regression problem, the output label is a scalar. To keep the notation consistent, we can treat it as a matrix with just one row

Similar to the label matrix, we can define the output matrix, which combines the network’s output vectors for all the examples

Again, for a binary classification or regression problem we have

Cost function

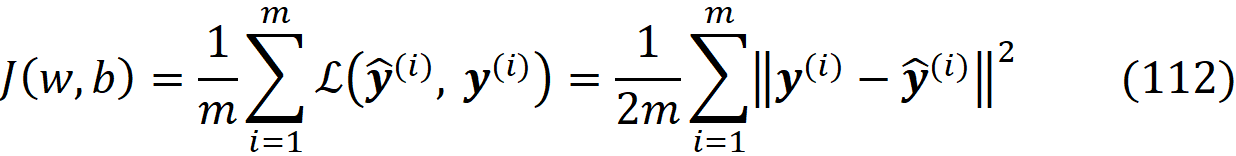

Remember that the output of the network is yhat. For each example x^(i), the output (or the network prediction) is yhat^(i), and ideally we want y^(i)=yhat^(i). The loss (or error) function measures the output error for a single example: it tells us how far the network output yhat^(i) is from the true label y^(i). The quadratic loss function is defined as

The loss function gives us the error for one specific example. However, we need the average error over all the examples, since we want the network to learn all of them together. So we define the quadratic cost function as the average of the loss function over all the examples

It is also known as the mean squared error (MSE) cost function. J is still a function of y^(i) and yhat^(i). But we know that the network output yhat^(i) is itself a function of the network parameters, so the cost function is in fact a function of these parameters. Here, w and b (without indices) denote the collection of the weights and biases of all the neurons in the network. Please note that both the loss function and the cost function are scalar functions (they return a scalar quantity). If we only have one neuron at the output layer, then Eqs. 111 and 112 become

w and b are the adjustable parameters of the network, and when we train a neural network, the goal is to find the weights and biases that minimize the cost function J(w, b). When the cost function is minimized, we expect the network to have the smallest possible error on the training set. If we multiply a function by a positive constant a, the minimum of aJ(w,b) occurs at the same values of w,b as does the minimum of J(w,b). So the multiplier 1/2 in Eq. 112 has no effect on the minimization of the cost function; it is usually added to simplify the calculations (it cancels the factor of 2 that appears when the squared term is differentiated). The MSE cost function is the default cost function for regression problems.

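A sketch of the quadratic cost, assuming it has the form J = (1/2m) Σ_i ||y^(i) - yhat^(i)||² (the 1/2 being the multiplier discussed above) and that the labels and network outputs are stored as matrices whose i-th columns are y^(i) and yhat^(i). The function name `mse_cost` and the data are hypothetical:

```python
import numpy as np

def mse_cost(Y, Y_hat):
    """Quadratic cost J = 1/(2m) * sum_i ||y^(i) - yhat^(i)||^2.

    Y, Y_hat: label and output matrices of shape (n[L], m), one example
    per column (a single row for regression with one output neuron).
    """
    m = Y.shape[1]
    return np.sum((Y - Y_hat) ** 2) / (2 * m)

# Hypothetical regression data: one output neuron, m = 3 examples
Y = np.array([[1.0, 2.0, 3.0]])
Y_hat = np.array([[1.5, 2.0, 2.0]])
print(mse_cost(Y, Y_hat))  # (0.25 + 0 + 1) / (2 * 3) = 0.2083...
```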

Cross-entropy cost function

The quadratic cost function is not the only function that we can use for a neural network. In fact, for a classification problem, we have a better choice called the cross-entropy function. It can be used when the activations of the neurons at the output layer are in the [0,1] range and can be thought of as probabilities. So at the output layer, you should have either a single neuron with the sigmoid activation function (binary classification), several neurons with sigmoid activations (multilabel classification), or several neurons with the softmax activation function (multiclass classification).

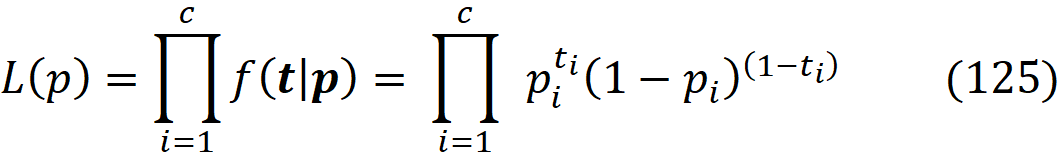

Cross-entropy can be defined using the likelihood function. In probability theory, the Bernoulli distribution is the discrete probability distribution of a random variable that can take only two possible values. We can label these values as ‘success’ and ‘failure’, or simply 1 and 0. An example is tossing a coin, where the outcome is either heads or tails. Now suppose that this random variable takes the value 1 with probability p and the value 0 with probability q=1-p. Here p is the parameter of the Bernoulli distribution. If we call this random variable T and use t for the values that it can take, the probability function of T can be written as follows

Here f(t|p) is the conditional probability of observing t as a value of T (T=t), given the parameter p. We can also combine the conditions of the previous equation into one equation by writing it as

When this probability function is regarded as a function of the parameter p, it is called the likelihood function

Eq. 115 is for one random variable (or one data point). If we have m independent random variables T_1, T_2, …, T_m with the same Bernoulli distribution (or simply m data points), and t_1, t_2, …, t_m denote the values of these variables, then the likelihood of observing T_1=t_1, T_2=t_2, …, T_m=t_m at the same time is the product of the likelihoods of observing T_i=t_i for each data point. Mathematically, the likelihood of our data given parameter p is

Now suppose that we know the values t_i, but p is unknown. We want to find the value of p that gives the highest probability of observing the specific value t_i for each random variable T_i. One way is to find the value of p that maximizes L(p) for those specific values of t_i. In statistics, this method is called maximum likelihood estimation. So we are looking for

Argmax is short for arguments of the maxima. The argmax of a function is the point (or points) of its domain at which the function is maximized. So argmax_p gives the value of p that maximizes L(p). To make the equation simpler, we maximize the natural logarithm of L(p) instead. Since the logarithm is a monotonically increasing function, the maximum of ln L(p) occurs at the same value of p as does the maximum of L(p).

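A small numerical illustration of maximum likelihood estimation, assuming the Bernoulli log-likelihood takes the form ln L(p) = Σ_i [t_i ln p + (1 - t_i) ln(1 - p)] (the logarithm of the product of the individual Bernoulli probabilities). The observed values and the grid of candidate p values are hypothetical. Evaluating ln L(p) on the grid shows the maximum landing at the sample mean of the t_i, which is the known closed-form maximum likelihood estimate of a Bernoulli parameter:

```python
import numpy as np

def bernoulli_log_likelihood(p, t):
    """ln L(p) = sum_i [t_i ln p + (1 - t_i) ln(1 - p)] for 0 < p < 1."""
    return np.sum(t * np.log(p) + (1 - t) * np.log(1 - p))

t = np.array([1, 0, 1, 1, 0, 1, 1, 0])   # hypothetical observed values t_1..t_m
p_grid = np.linspace(0.001, 0.999, 999)  # candidate values of p
log_l = np.array([bernoulli_log_likelihood(p, t) for p in p_grid])
p_hat = p_grid[np.argmax(log_l)]         # grid-search argmax of ln L(p)

print(p_hat, t.mean())  # both are (approximately) 0.625
```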

We call ln L(p) the log-likelihood. Using Eq. 116 we can write

So we have

Now imagine that we have a single output neuron with a sigmoid activation function and m examples in the training set. The neuron’s activation for example i is yhat^(i), and the true label is y^(i). Since we only have one neuron in the last layer, yhat^(i) is a scalar, not a vector. Here yhat^(i) is a variable that can be changed by changing the network parameters. As mentioned before, we define a threshold to convert the activation of a single neuron into a binary output (the binary output is 1 if the activation is greater than 0.5 and 0 otherwise). We can think of yhat^(i) as the probability of getting 1 as the binary output of the neuron.

We can also think of the binary output of the neuron as a random variable that has a Bernoulli distribution with parameter yhat^(i). Now we want the log-likelihood of observing the true label y^(i) as the value of this random variable. So in Eq. 117, we can replace p by yhat^(i) and t_i by y^(i). Now we can write the log-likelihood function for this neuron (for the whole training set) as

There is one more problem. In Eq. 49.5, the parameter p is the same for all the data points, since they all follow the same Bernoulli distribution. However, here yhat^(i) may be different for each i, since it is a function of the input vector x^(i), and x^(i) is different for each example. What remains the same for all the data points is the network parameters w and b, and yhat^(i) is also a function of them. So when maximizing ln L, we maximize it with respect to w and b instead of p

However, instead of maximizing ln L(yhat^(i)), we can minimize its negative

to get the same result

As mentioned before, if we multiply a function by a positive constant a, the minimum of aC(w,b) occurs at the same values of w,b as does the minimum of C(w,b). So we can multiply the right-hand side of Eq. 119 by 1/m and minimize that instead

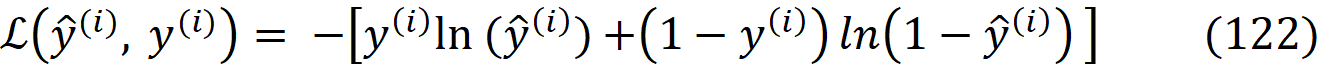

Now we can think of –ln L(yhat^(i)) as a new cost function that should be minimized and we call it the binary cross-entropy cost function

So minimizing this cost function minimizes the network’s error in predicting the true labels of the examples. Binary cross-entropy is the default cost function for binary classification problems. In this equation, we can view the cost function as the average of the loss function over all the examples

This is similar to what we did in Eq. 112 for the quadratic cost function.

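A sketch of the binary cross-entropy cost, assuming the form C = -(1/m) Σ_i [y^(i) ln yhat^(i) + (1 - y^(i)) ln(1 - yhat^(i))]. The clipping constant `eps` is a common numerical safeguard that keeps the logarithm away from zero; it is an addition, not part of the derivation. The labels and predictions are hypothetical:

```python
import numpy as np

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """C = -(1/m) * sum_i [y_i ln(yhat_i) + (1 - y_i) ln(1 - yhat_i)].

    y:     true labels (0 or 1), shape (m,)
    y_hat: sigmoid activations of the output neuron, shape (m,)
    """
    y_hat = np.clip(y_hat, eps, 1 - eps)
    return -np.mean(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

y = np.array([1, 0, 1, 0])
good = np.array([0.9, 0.1, 0.8, 0.2])  # confident, correct predictions
bad = np.array([0.2, 0.7, 0.4, 0.9])   # mostly wrong predictions
print(binary_cross_entropy(y, good))   # small cost
print(binary_cross_entropy(y, bad))    # much larger cost
```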

If we have a multilabel classification problem with c classes, our output layer should have c neurons with sigmoid activations. Each neuron gives the value of one of the elements of the multi-hot encoded label vector y^(i). These neurons work independently, so each of them can use a binary cross-entropy cost function. Suppose that we have c independent random variables {T_j; j=1..c}, each with a Bernoulli distribution. We can represent them with the random vector

A random variable is usually denoted by an uppercase letter, and since this one is also a vector, it is written as an uppercase boldface letter, so please don’t confuse it with a matrix. The probability function of each random variable is

where p_i is the distribution parameter of each random variable. These parameters can be represented by the vector

Now we want to know the probability of observing a specific value for each of these random variables at the same time. If T_1=t_1, T_2=t_2, …, T_c=t_c, then the vector

can represent their values. Now since these random variables are independent, the likelihood function of observing T=t given p is

We have m data points (each data point is a c-dimensional point here), and each of them can be represented by t^(i). In addition, we assume that for each data point we have a separate distribution parameter. So the likelihood function for m points will be

and the log-likelihood will be

Again, we can think of the binary output of each neuron in the last layer as a random variable that has a Bernoulli distribution, with the neuron’s activation as its parameter. Now we want to calculate the log-likelihood of observing the label vector of each example for the neurons of the last layer. So in Eq. 127, we can replace p_i^(j) by yhat_i^(j), t_i^(j) by y_i^(j), and c by n^[L]. Now we can write the log-likelihood function for the last layer and for the whole training set as

We minimize

with respect to w and b. So the cost function (with the addition of the multiplier 1/m) will be

It is the average of this loss function

over all the training examples.
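
The link between the Bernoulli log-likelihood and the cost can be checked numerically. The sketch below is a minimal NumPy illustration, not the article's own code: it assumes the labels y and activations yhat are stored as arrays of shape (n^[L], m), one column per training example, and the function names and toy values are hypothetical. It confirms that the log of the product likelihood equals the summed log-likelihood, and that the cost (the negative log-likelihood with the 1/m multiplier) is the average of the per-example losses.

```python
import numpy as np

# Assumed layout: arrays of shape (n_L, m), one column per training example.
def bernoulli_log_likelihood(y, y_hat):
    """Sum over j and i of y_i^(j) log yhat_i^(j) + (1 - y_i^(j)) log(1 - yhat_i^(j))."""
    return np.sum(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def binary_cross_entropy_cost(y, y_hat):
    """Negative log-likelihood with the 1/m multiplier (hypothetical helper name)."""
    m = y.shape[1]
    return -bernoulli_log_likelihood(y, y_hat) / m

# Made-up labels and activations: n_L = 2 output neurons, m = 3 examples.
y = np.array([[1, 0, 1],
              [0, 0, 1]])
y_hat = np.array([[0.9, 0.2, 0.7],
                  [0.1, 0.4, 0.6]])

# Likelihood: product of yhat^y * (1 - yhat)^(1 - y) over all neurons and examples.
likelihood = np.prod(y_hat ** y * (1 - y_hat) ** (1 - y))

# Loss of each example (one value per column); the cost should be their mean.
per_example_loss = -np.sum(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat), axis=0)

print(np.log(likelihood), bernoulli_log_likelihood(y, y_hat))
print(binary_cross_entropy_cost(y, y_hat), per_example_loss.mean())
```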

But what happens if we have a multiclass problem with more than one neuron at the output layer? Here we can use the multinomial distribution. Suppose that we have a discrete random variable T that can take c different values from the set {1, 2, …, c}, so it’s like a c-sided die. The probability that it takes the value i is p_i, and

We can use a one-hot encoded vector to represent the current state of this random variable. So we have the vector t with c elements, and when T=j, the j-th element of t will be equal to 1 while the other elements are zero

So the original random variable T can be represented by a random vector T whose possible values are the one-hot vectors t. Since the probability of T=j is p_j, the probability of observing the vector t with t_j=1 is also p_j.
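
A quick simulation can make the one-hot representation concrete. In this sketch the values of p, the random seed, and the helper name one_hot are all assumptions chosen for illustration: samples of the die-like variable T are converted to one-hot vectors t, and the fraction of vectors with t_j = 1 is compared with p_j.

```python
import numpy as np

def one_hot(j, c):
    """Hypothetical helper: vector t with c elements and t_j = 1 (classes numbered 1..c)."""
    t = np.zeros(c)
    t[j - 1] = 1.0  # class j sits at 0-based index j - 1
    return t

p = np.array([0.2, 0.5, 0.3])   # assumed p_1, p_2, p_3 (they sum to 1)
c = p.size

rng = np.random.default_rng(seed=0)
T = rng.choice(np.arange(1, c + 1), size=100_000, p=p)   # samples of T in {1, ..., c}
t_vectors = np.eye(c)[T - 1]                               # row k is the one-hot vector for T[k]

# Fraction of samples whose one-hot vector has t_j = 1, for each j.
freq = t_vectors.mean(axis=0)
print(one_hot(2, c), freq)
```

With enough samples, freq should be close to p, since choosing T=j and observing the vector t with t_j=1 are the same event.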

Since all the elements except the j-th should be zero, we can write

If we multiply Eq. 132 by Eq. 133 we get

So the probability of observing the one-hot encoded vector t for the random vector T, given the parameters p, is

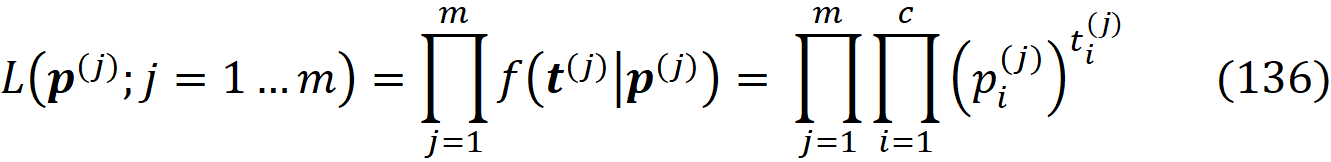
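
The product form of this probability function can be evaluated directly. The sketch below assumes Eq. 135 has the standard form P(T=t|p) = ∏_i p_i^{t_i}, with made-up values of p and a hypothetical function name. Because every factor with t_i = 0 equals 1, the product reduces to p_j for the one-hot vector with t_j = 1, and its logarithm is the sum of t_i log p_i.

```python
import numpy as np

def categorical_pmf(t, p):
    """P(T = t | p) = prod_i p_i ** t_i for a one-hot vector t (assumed form of Eq. 135)."""
    return np.prod(p ** t)

p = np.array([0.2, 0.5, 0.3])   # assumed parameters p_1, p_2, p_3

for j in range(1, p.size + 1):
    t = np.eye(p.size)[j - 1]   # one-hot vector with t_j = 1
    # Factors with t_i = 0 are p_i ** 0 = 1, so only p_j survives.
    print(j, categorical_pmf(t, p), np.exp(np.sum(t * np.log(p))))
```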

Eq. 135 is a special case of a multinomial distribution. Here each data point is a c-dimensional point (t_1, t_2, …, t_c), which is indicated by the vector t, so this equation still describes a single data point. If we have m data points t^(1), t^(2), …, t^(m), the likelihood will be

So the log-likelihood becomes

Now we have to minimize the negative of the log-likelihood

Now assume that we have a softmax layer with n^[L] neurons at the last layer. The label vector for the training example x^(j) is y^(j), which has n^[L] elements. The softmax activation of neuron i for example j is yhat_i^(j), and the true label is the i-th element of y^(j), which is equal to y_i^(j).
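
For concreteness, the softmax activation of the last layer can be sketched as follows, assuming the usual definition yhat_i^(j) = e^{z_i^(j)} / Σ_k e^{z_k^(j)} and storing the net inputs z^[L] as an array of shape (n^[L], m). Subtracting the column maximum before exponentiating is a common numerical-stability trick that does not change the result; the net inputs are made up.

```python
import numpy as np

def softmax(z):
    """Column-wise softmax; z has shape (n_L, m), one column per example (assumed layout)."""
    z_shifted = z - z.max(axis=0, keepdims=True)   # stability shift, result unchanged
    e = np.exp(z_shifted)
    return e / e.sum(axis=0, keepdims=True)

# Made-up net inputs z^[L] for n_L = 3 neurons and m = 2 examples.
z = np.array([[2.0, 0.0],
              [1.0, 0.0],
              [0.1, 0.0]])

y_hat = softmax(z)
print(y_hat)
print(y_hat.sum(axis=0))   # every column sums to 1
```

Because each column of yhat is positive and sums to 1, its elements can serve as the parameters of the multinomial distribution discussed above.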

Again, we can think of yhat_i^(j) as the probability of getting 1 as the binary output of neuron i (for example j). So we can also think of the binary output of the softmax layer as a random vector that has a multinomial distribution, whose parameters are the elements yhat_i^(j) of yhat^(j). Each label vector is a possible value that this random vector can take. Now we want to calculate the log-likelihood that this random vector takes the values of the vector y^(j). So in Eq. 138, we can replace p_i^(j) by yhat_i^(j), t_i^(j) by y_i^(j), and c by n^[L], add 1/m as a multiplier, and minimize it with respect to w and b

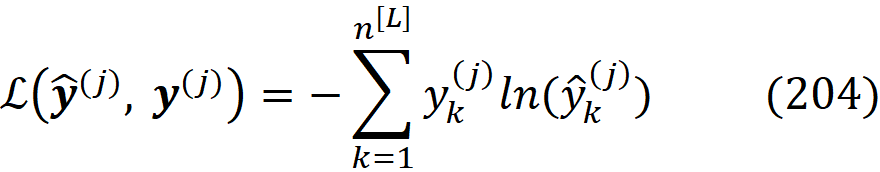

So we can write the cost function as

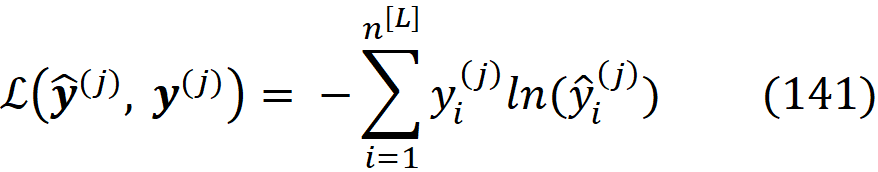

This is called the categorical cross-entropy cost function, which is the default cost function for multiclass classification problems. Again, we can think of the cost function as the average of the loss function over all the examples
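
The categorical cross-entropy cost can be computed in a few lines. This is a minimal NumPy sketch, assuming one-hot labels y and softmax outputs yhat stored with shape (n^[L], m); the function name and the numbers are illustrative. Because the labels are one-hot, only the predicted probability of the true class contributes to each example's loss, and the cost is the mean of those losses.

```python
import numpy as np

def categorical_cross_entropy_cost(y, y_hat):
    """-(1/m) * sum over j and i of y_i^(j) * log(yhat_i^(j)); arrays of shape (n_L, m)."""
    m = y.shape[1]
    return -np.sum(y * np.log(y_hat)) / m

# Made-up one-hot labels for m = 2 examples and n_L = 3 classes.
y = np.array([[1, 0],
              [0, 0],
              [0, 1]])
# Made-up softmax outputs; each column sums to 1.
y_hat = np.array([[0.7, 0.1],
                  [0.2, 0.3],
                  [0.1, 0.6]])

cost = categorical_cross_entropy_cost(y, y_hat)
per_example_loss = -np.sum(y * np.log(y_hat), axis=0)   # loss of each example
print(cost, per_example_loss.mean())
```

Here the cost is the average of -log(0.7) and -log(0.6), the predicted probabilities of the two true classes.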

Gradient descent

So far we have seen that learning the true labels of the examples is equivalent to minimizing the cost function with respect to the network's adjustable parameters. Gradient descent is an optimization algorithm that minimizes a function by iteratively moving in the direction of the negative gradient of the function at the current point. Suppose that we have a function f(x_1, x_2, …, x_n) and we want to minimize it with respect to all its variables. We define the vector x as

So each set of values for {x_1, x_2, …, x_n} can be represented by a point in an n-dimensional space, and the vector x refers to that point. We can assume that function f is a function of this vector

Now we start with an initial point represented by x_initial, and from this n-dimensional point, we want to move iteratively toward the point x_min that minimizes f(x). So we start at x_initial, and at each step, we find a new point using the previous point

We keep replacing our current point with a new point using this equation until we get close enough to x_min (within a tolerance). Δx still needs to be determined, and the gradient descent method tells us how to choose it. From calculus, we know that

where dx_i denotes an infinitesimal change in x_i. For a relatively small change in x_i, we can write

Using the definition of the gradient (Eq. 41) and the dot product (Eq. 24), we write the previous equation as

We want to move in the direction that gives the biggest decrease in Δf. Recall that, for vectors of fixed length, the dot product is smallest when the two vectors point in opposite directions. So Δx should point in the direction of -∇f, although we are free to choose its magnitude. Hence we can write Δx=-α∇f, where α is a positive scalar multiplier that sets the magnitude of Δx. So we can write Eq. 144 as

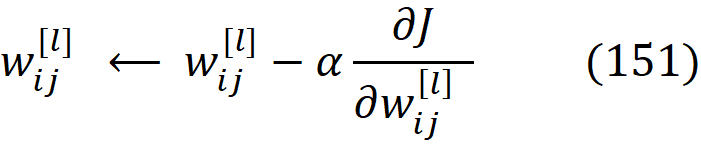
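
The update rule x ← x - α∇f(x) translates directly into a loop. The sketch below rests on assumptions that are not in the text: a hand-picked quadratic test function whose minimum is known to be at (3, -1), a fixed learning rate, and a stopping rule that ends the loop once the step length falls below a tolerance (one simple way to decide we are "close enough" to x_min). The names gradient_descent, f, and grad_f are hypothetical.

```python
import numpy as np

def gradient_descent(grad_f, x_initial, alpha=0.1, tol=1e-8, max_iter=10_000):
    """Repeat x <- x - alpha * grad_f(x) until the step is shorter than tol."""
    x = np.asarray(x_initial, dtype=float)
    for _ in range(max_iter):
        step = alpha * grad_f(x)       # alpha times the gradient at the current point
        x = x - step                   # move against the gradient
        if np.linalg.norm(step) < tol:
            break
    return x

# Hypothetical test function f(x) = (x_1 - 3)^2 + 2 (x_2 + 1)^2, minimum at (3, -1).
def f(x):
    return (x[0] - 3) ** 2 + 2 * (x[1] + 1) ** 2

def grad_f(x):
    return np.array([2 * (x[0] - 3), 4 * (x[1] + 1)])

x_min = gradient_descent(grad_f, x_initial=[0.0, 0.0], alpha=0.1)
print(x_min, f(x_min))
```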

α is called the learning rate, and it is allowed to change at every iteration. We can also write Eq. 148 for each element of x to get

Now we can get back to our cost function C(w,b), which is a function of all the weights and biases in the network. So we can write

for all possible values of i, j and l. Now if we assume that we have p adjustable parameters in the network (all the weights and biases in all layers together), these parameters form a p-dimensional space and we can use Eq. 149 for each of them

These equations can also be written in vector form

In Eq. 147, Δx should be small enough for the approximation of Δf to be accurate. As a result, the learning rate (α) shouldn’t be too big; otherwise, we may take a step so large that f actually increases (Δf > 0). On the other hand, if α is too small, the steps toward the minimum are very short, and gradient descent converges very slowly.
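
The effect of the learning rate is easy to see on a one-dimensional example. For the assumed test function f(x) = x^2, the update is x ← x - 2αx = (1 - 2α)x, so the iterates shrink toward the minimum only when |1 - 2α| < 1, that is, 0 < α < 1. The sketch runs 50 steps with a very small, a moderate, and a too-large learning rate (all values chosen for illustration; run_gradient_descent is a hypothetical name).

```python
import numpy as np

def run_gradient_descent(alpha, x0=1.0, steps=50):
    """Minimize f(x) = x**2 (gradient 2x) from x0 and return f at every iterate."""
    x = x0
    f_values = [x ** 2]
    for _ in range(steps):
        x = x - alpha * 2 * x      # one gradient descent step
        f_values.append(x ** 2)
    return np.array(f_values)

for alpha in (0.001, 0.1, 1.1):
    f_values = run_gradient_descent(alpha)
    print(f"alpha = {alpha}: f after 50 steps = {f_values[-1]:.3g}")
```

With α = 0.001, f has barely moved after 50 steps; with α = 0.1, it is essentially zero; with α = 1.1, every step overshoots the minimum and f grows, which is the Δf > 0 case described above.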

Backpropagation

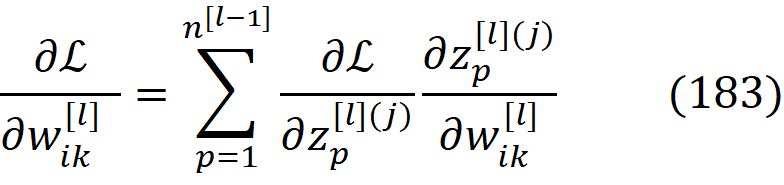

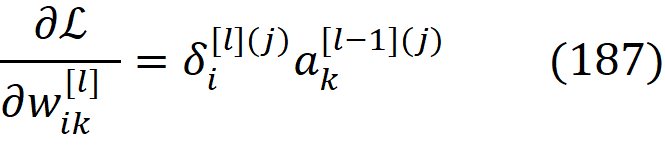

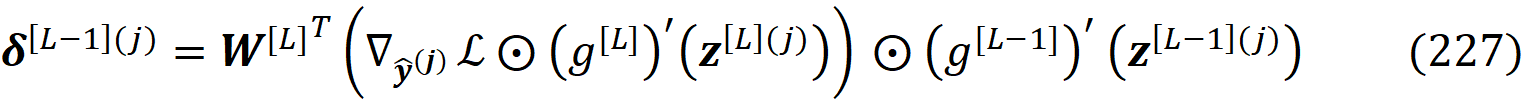

To use the gradient descent method defined by Eqs. 151 and 152, we need to calculate the partial derivatives (the gradient) of the cost function with respect to w and b, and to do that we use an algorithm called backpropagation. So the main goal of backpropagation is to compute

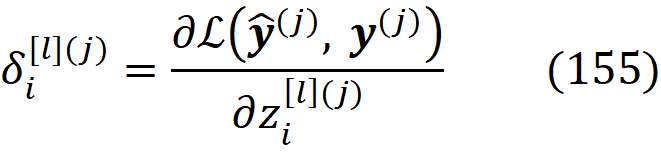

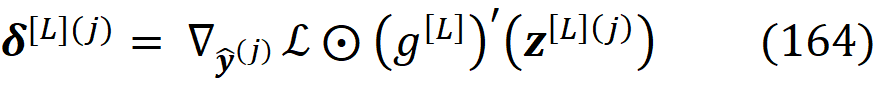

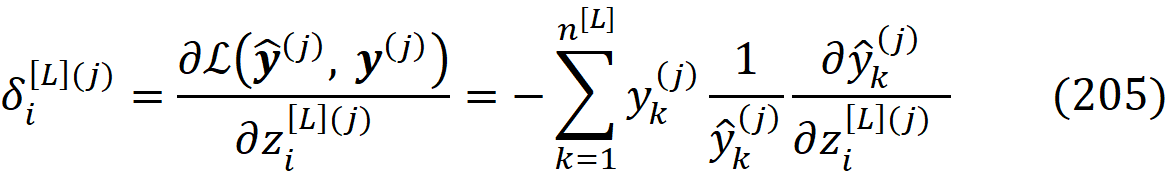

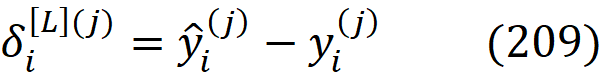

In the backpropagation method, we first introduce an intermediate quantity

which is called the error of the i-th neuron in the l-th layer. Using the definition of the gradient (Eq. 41), the previous equation can be written as

in vector form. We may write the loss function without its arguments and the index of the training example in some of the equations, like Eq. 156, but note that the loss function always refers to a single training example.
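
The definition of the error as the partial derivative of the loss with respect to a neuron's net input can be checked numerically for a single example. The sketch below assumes, purely for illustration, a softmax output layer with the categorical cross-entropy loss and made-up values of z^[L] and y; it estimates each δ_i^[L] with a central finite difference. For this particular combination, it is a standard result that the error vector equals yhat - y, which the estimate reproduces. The function names are hypothetical.

```python
import numpy as np

def softmax(z):
    """Softmax of a single example's net inputs (1-D array)."""
    e = np.exp(z - z.max())
    return e / e.sum()

def loss(z, y):
    """Categorical cross-entropy loss of one example, as a function of z^[L]."""
    return -np.sum(y * np.log(softmax(z)))

def numerical_error(z, y, eps=1e-6):
    """Central-difference estimate of delta_i = dL/dz_i for every output neuron i."""
    delta = np.zeros_like(z)
    for i in range(z.size):
        dz = np.zeros_like(z)
        dz[i] = eps                     # nudge only the net input of neuron i
        delta[i] = (loss(z + dz, y) - loss(z - dz, y)) / (2 * eps)
    return delta

z = np.array([1.5, -0.3, 0.8])   # made-up net inputs of the last layer
y = np.array([0.0, 1.0, 0.0])    # one-hot label of the example

delta = numerical_error(z, y)
print(delta)
print(softmax(z) - y)            # closed form for softmax + cross-entropy
```

A finite-difference estimate like this is slow (two loss evaluations per neuron), which is why backpropagation computes the errors analytically instead; it remains useful as a check.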

The error vector and z^[l] have the same number of elements, and using Eq. 41, the error vector for layer l can also be written as

It is important to note that the error vector is defined for a single training example j. The error for a specific neuron relates the change in the neuron’s net input to the change in the loss function. If we change a neuron’s net input from

to

the neuron’s output changes to be